
By: Mike Horrocks The other day in the American Banker, there was an article titled “Is Loan Growth a Bad Idea Right Now?”, which brings up some great questions on how banks should be looking at their C&I portfolios (or frankly any section of the overall portfolio). I have to admit I was a little down on the industry for thinking the only way we can grow is by cutting rates or maybe making bad loans. This downer moment required that I hit my playlist shuffle and, like an oracle from the past, The Clash and their hit song “Should I Stay or Should I Go” gave me sage-like insights that need to be shared. First, who are you listening to for advice? While I would not recommend having all the members of The Clash on your board of directors, could you have maybe one? Ask yourself: are your boards, executive management teams, loan committees, etc., all composed of the same people, with maybe the only difference being iPhone versus Android? Get some alternative thinking in the mix. There is tons of research to show this works. Second, set your standards and stick to them. In the song, there is a part where we have a bit of a discussion that goes like this. “This indecision's buggin' me, If you don't want me, set me free. Exactly whom I'm supposed to be, Don't you know which clothes even fit me?” Set your standards and just go after them. There should be no doubt if you are going to do a certain kind of loan or not based on the pricing. Know your pricing, know your limits, and dominate that market. Lastly, remember business cycles. I am hopeful and optimistic that we will have some good growth here for a while, but there is always a downturn…always. Again from the lyrics – “If I go there will be trouble, An' if I stay it will be double” In the American Banker article, M&T Bank CFO Rene Jones called out that an unnamed competitor made a 10-year fixed $30 million loan at a rate that they (M&T) just could not match.
So congrats to M&T for recognizing the pricing limits, and maybe congrats to the unnamed bank for having some competitive advantage that allowed them to make the loan. However, if there is not something like that supporting the other bank…the short-term pain of explaining slower growth today may seem like nothing compared to the questioning they will get if that portfolio goes south. So in the end, I say grow – soundly. Shake things up so you open new markets or create advantages in your current market and rock the Casbah!

A behind-the-wheel look at alternative-power vehicles:

Tax return fraud: Using 3rd party data and analytics to stay one step ahead of fraudsters By Neli Coleman According to a May 2014 Governing Institute research study of 129 state and local government officials, 43 percent of respondents cited identity theft as the biggest challenge their agency is facing regarding tax return fraud. Nationwide, stealing identities and filing for tax refunds has become one of the fastest-growing nonviolent criminal activities in the country. These activities are not only burdening government agencies, but also robbing taxpayers by preventing returns from reaching the right people. Anyone who has access to a computer can fill out an income-tax form online and hit submit. Most tax returns are processed and refunds released within a few days or weeks. This quick turnaround doesn’t allow the government time to fully authenticate all the elements submitted on returns, and fraudsters know how to exploit this vulnerability. Once released, these monies are virtually untraceable. Unfortunately, simply relying on business rules based on past behaviors and conducting internal database checks is no longer sufficient to stem the tide of increasing tax fraud. The use of a risk-based identity-authentication process coupled with business-rules-based analysis and knowledge-based authentication tools is critical to identifying fraudulent tax returns. The ability to perform non-traditional checks that go beyond the authentication of the individual to consider the methods and devices used to perpetrate the tax-refund fraud further strengthens the tax-refund fraud-detection process. The inclusion of multiple non-traditional checks within a risk-based authentication process closes additional loopholes exploited by the tax fraudster, while simultaneously decreasing the number of false positives. 
Experian’s Tax Return Analysis Platform℠ provides both the verification of identity and the risk-based authentication analytics that score the potential fraud risk of a tax return, along with specific flags that identify the return as fraudulent. Our data and analytics are a product of years of expertise in consumer behavior and fraud detection, along with unique services that detect fraud in the devices being used to submit the returns and in identity credentials that have been used to perpetrate fraud in financial transactions outside of tax. Together, the combination of rules-based and risk-based income-tax-refund fraud-detection protocols can curb one of the fastest-growing nonviolent criminal activities in the country. With identity theft reaching unprecedented levels, government agencies need new technologies and processes in place to stay one step ahead of fraudsters. In a world where most transactions are conducted in virtual anonymity, it is difficult, but not impossible, to keep pace with technological advances and the accompanying pitfalls. A combination of existing business rules based on authentication processes and risk-based authentication techniques provided through third-party data and analytics services creates a multifaceted approach to income-tax-refund fraud detection, which enables revenue agencies to further increase the number of fraudulent returns detected. Every fraudulent return that is identified and left unpaid improves the government’s ability to continue to meet the demand for services by its constituents while at the same time strengthening the public’s trust in the tax system.

Source: IntelliView℠ powered by Experian

Sales of existing homes dropped 50% from the peak in August 2005 to the low point in July 2010. The spike in home sales in late 2009 and early 2010 was due to the large number of foreclosure sales as well as very low prices. Since 2010, sales have increased almost to the level they were at in 2000, before the financial crisis. However, the homeownership rate has steadily gone down. How could sales have picked up while the homeownership rate declined? Investors have entered the market, snapping up single-family homes and renting them. Therefore, the recent good news in the existing home market has been driven by investors, not homeowners. But as I point out below, this is changing. Looking at the homeownership rate by age, shown in the table below, it is clear that since the crisis the rate has declined most for people under 45. The potential for marketing is greatest in this cohort, as the numbers indicate a likely demand for housing.

Homeownership Rate by Age. Source: U.S. Census Bureau and Haver Analytics, as reported on the Federal Reserve Bank of St. Louis FRED database

The factors that have impeded growth, described above, are beginning to reverse, which, along with pent-up demand, will present an opportunity for mortgage originators in 2015. Home prices have risen in 246 of the 277 cities tracked by Clear Capital. With prices going up, investors have begun to back away from the market, resulting in prices increasing at a slower rate in some cities, but they are still increasing. Therefore the perception that homeownership is risky will likely change. In fact, in some areas, such as California’s coastal cities, sales are strong and prices are going up rapidly.
Lenders and regulators are recognizing that the stringent guidelines put in place in reaction to the crisis have overly constrained the market. Fannie Mae and Freddie Mac are reducing down payment requirements to as low as 3%. FHA is lowering its guarantee fee, reducing the amount of cash buyers need to close transactions. Private securitizations, which dried up completely, are beginning to reappear, especially in the jumbo market. As unemployment continues to go down, consumer confidence will rise and household formation will return to more normal levels, which will result in more sales to first-time homebuyers, who drive the market. According to Lawrence Yun, chief economist for the National Association of Realtors, “…it’s all about consistent job growth for a prolonged period, and we’re entering that stage.” The number of houses in foreclosure, according to RealtyTrac, has fallen to pre-crisis levels. This drag on the market has, for the most part, cleared, and as prices continue to inflate, potential buyers will be motivated to buy before homes become unaffordable. Despite the recent increases, home prices are still, on average, 23% lower than they were at the peak. Focusing marketing dollars on those people with the highest propensity to buy has always been a challenge, but in this market there are identifiable targets. “Boomerangs” are people who owned real estate in the past but are currently renting and likely to come back into the market. Marketing to qualified former homeowners would provide a solid return on investment. People renting single-family houses are indicating a lifestyle preference that can be marketed to. Newly formed households are also profitable targets. The housing market, at long last, appears to be turning the corner and normalizing. Experian’s expertise in identifying the right consumers can help lenders pinpoint the right people on whom marketing dollars should be invested to realize the highest level of return. Click here to learn more.

Cont. Understanding Gift Card Fraud By: Angie Montoya In part one, we spoke about what an amazing deal gift cards (GCs) are, and why they are incredibly popular among consumers. Today we are going to dive deeper and see why fraudsters love gift cards and how they are taking advantage of them. We previously mentioned that it’s unlikely a fraudster is the actual person who redeems a gift card for merchandise. Although it is true that some fraudsters may occasionally enjoy a latte or new pair of shoes on us, it is much more lucrative for them to turn these forms of currency into cold hard cash. Doing this also shifts the risk onto an unsuspecting victim and off of the fraudster. For the record, it’s also incredibly easy to do. All of the innovation that was used to help streamline the customer experience has also helped to streamline the fraudster experience. The websites that are used to trade unredeemed cards for other cards or cash are the same websites used by fraudsters. Although there are some protections for the customer on the trading sites, the website host is usually left holding the bag when they have paid out for a GC that has been revoked because it was purchased with stolen credit card information. Other sites, like Craigslist and social media yard sale groups, do not offer any sort of consumer protection, so there is no recourse for the purchaser. What seems like a great deal, buying a GC at a discounted rate, could turn out to be a devalued gift card with no balance, because the merchant caught on to the original scheme. There are ten states in the US that have passed laws surrounding the cashing out of gift cards. * These laws enable consumers to go to a physical store location and receive, in cash, the remaining balance of a gift card. Most states impose a limit of $5, but California has decided to be a little more generous and extend that limit to $10.
As a consumer, it’s a great benefit to be able to receive the small remaining balance in cash, a balance that you will likely forget about and might never use, and the laws were passed with this in mind. Unfortunately, fraudsters have zeroed in on this benefit and are fully taking advantage of it. We have seen a host of merchants experiencing a problem with fraudulently obtained GCs being cashed out in California locations, specifically because they have a higher threshold. While five dollars here and ten dollars there does not seem like very much, it adds up when you realize that this could be someone’s full-time job. Cashing out three ten-dollar cards would take on average 15 minutes. Over the course of a 40-hour workweek, repeated all year, it can turn into a six-figure salary. At this point, you might be asking yourself how fraudsters obtain these GCs in the first place. That part is also fairly easy. User credentials and account information are widely available for purchase in underground forums, due in part to the recent increase in large-scale data breaches. Once these credentials have been obtained, fraudsters can do one of several things:

- Put card data onto a dummy card and use it in a physical store
- Use credit card data to purchase on any website
- Use existing credentials to log in to a site and purchase with stored payment information
- Use existing credentials to log in to an app and trigger auto-reloading of accounts, then transfer to a GC

With all of these daunting threats, what can a merchant do to protect their business? First, you want to make sure your online business is screening for both the purchase and redemption of gift cards, both electronic and physical. When you screen for the purchase of GCs, you want to look for things like the quantity of cards purchased, the velocity of orders going to a specific shipping address or email, and the velocity of devices being used to place multiple orders.
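The purchase-screening checks just described (card quantity, order velocity per shipping address or email, and order velocity per device) could be sketched roughly as follows. This is only an illustration: the `GiftCardOrder` fields, the `flag_orders` helper, and both thresholds are hypothetical, and the order list is assumed to already be limited to a single review window such as the last 24 hours.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class GiftCardOrder:
    # Hypothetical order record; field names are illustrative only.
    order_id: str
    email: str
    shipping_address: str
    device_id: str
    card_count: int


# Hypothetical thresholds; real limits would be tuned per merchant.
MAX_CARDS_PER_ORDER = 5
MAX_ORDERS_PER_KEY = 3


def flag_orders(orders):
    """Return {order_id: [reasons]} for orders that trip a quantity or velocity rule."""
    # Count how many orders in the window share each email, address, and device.
    by_email = Counter(o.email for o in orders)
    by_address = Counter(o.shipping_address for o in orders)
    by_device = Counter(o.device_id for o in orders)

    flagged = {}
    for o in orders:
        reasons = []
        if o.card_count > MAX_CARDS_PER_ORDER:
            reasons.append("quantity")
        if by_email[o.email] > MAX_ORDERS_PER_KEY:
            reasons.append("email_velocity")
        if by_address[o.shipping_address] > MAX_ORDERS_PER_KEY:
            reasons.append("address_velocity")
        if by_device[o.device_id] > MAX_ORDERS_PER_KEY:
            reasons.append("device_velocity")
        if reasons:
            flagged[o.order_id] = reasons
    return flagged
```

Returning the list of reasons rather than a single yes/no lets a reviewer see which rule fired, which helps keep legitimate bulk buyers (for example, corporate gifting) from being blocked outright.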
You also want to monitor the redemption of loyalty rewards, and any traffic that goes into these accounts. Loyalty fraud is a newer type of fraud that has exploded because these channels are not normally monitored for fraud: there is no actual financial loss, so priority has been placed elsewhere in the business. However, loyalty points can be redeemed for gift cards, or sold on the black market, and the downstream effect is that it can inconvenience your customer and harm your brand’s image. Additionally, if you offer physical GCs, you want to have a scratch-off PIN on the back of the card. If a GC is offered with no PIN, fraudsters can walk into a store, take a picture of the different card numbers, and then redeem online once the cards have been activated. Fraudsters will also tumble card numbers once they have figured out the numerical sequence of the cards. Using a PIN prevents both of these problems. The use of GCs is going to continue to increase in the coming years; this is no surprise. Mobile will continue to be incorporated with these offerings, and answering security challenges will be paramount to their success. Although we are in the age of the data breach, there is no reason that the experience of purchasing or redeeming a gift card should be hampered by overly cautious fraud checks. It’s possible to strike the right balance: grow your business securely by implementing a fraud solution that is fraud minded AND customer centric. *The terms GC and eGC are used interchangeably.

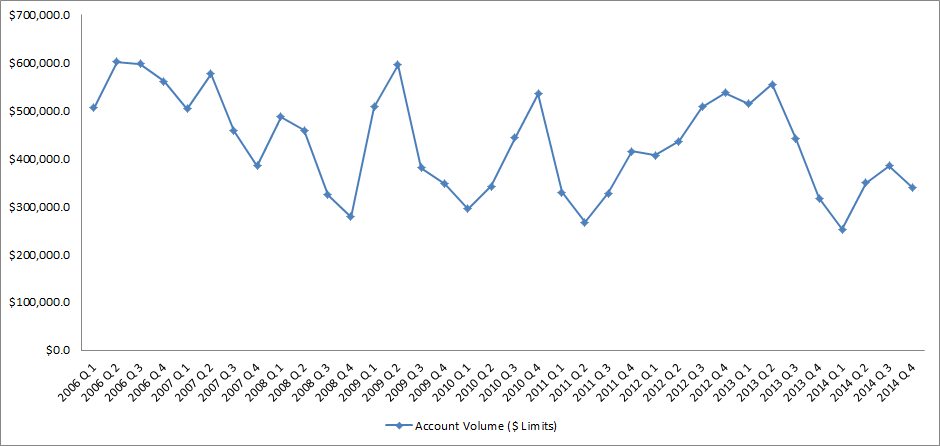

End-of-Draw approaching for many HELOCs Home equity lines of credit (HELOCs) originated during the U.S. housing boom period of 2006 – 2008 will soon approach their scheduled maturity or repayment phases, also known as “end-of-draw”. These 10-year interest-only loans will convert to an amortization schedule to cover both principal and interest. The substantial increase in monthly payment amount will potentially shock many borrowers, causing them to face liquidity issues. Many lenders are aware that the HELOC end-of-draw issue is drawing near and have been trying to get ahead of and restructure this debt. RealtyTrac, the leading provider of comprehensive housing data and analytics for the real estate and financial services industries, foresees this reset risk issue becoming a much bigger crisis than what lenders are expecting. There is a large percentage of outstanding HELOCs where the properties are still underwater. That number was at 40% in 2014 and is expected to peak at 62% in 2016, corresponding to the 10-year period after the peak of the U.S. housing bubble. RealtyTrac executives are concerned that the number of properties with a 125%-plus loan-to-value ratio has become higher than predicted. The Office of the Comptroller of the Currency (OCC), the Board of Governors of the Federal Reserve System, the Federal Deposit Insurance Corporation, and the National Credit Union Administration (collectively, the agencies), in collaboration with the Conference of State Bank Supervisors, have jointly issued regulatory guidance on risk management practices for HELOCs nearing end-of-draw. The agencies expect lenders to manage risks in a disciplined manner, recognizing risk and working with those distressed borrowers to avoid unnecessary defaults. A comprehensive strategic plan is vital in order to proactively manage the outstanding HELOCs on their portfolio nearing end-of-draw.
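To make the end-of-draw payment increase concrete, here is a minimal sketch that compares an interest-only payment with a fully amortizing one using the standard level-payment formula. The balance, rate, and repayment term in the usage note are illustrative assumptions, not figures from this post, and the function names are hypothetical.

```python
def interest_only_payment(balance, annual_rate):
    """Monthly payment during the draw period: interest on the balance only."""
    return balance * annual_rate / 12


def amortizing_payment(balance, annual_rate, years):
    """Monthly principal-and-interest payment over the repayment term."""
    r = annual_rate / 12   # monthly rate
    n = years * 12         # number of monthly payments
    if r == 0:
        return balance / n
    return balance * r / (1 - (1 + r) ** -n)


def payment_shock(balance, annual_rate, years):
    """Ratio of the amortizing payment to the interest-only payment."""
    return amortizing_payment(balance, annual_rate, years) / interest_only_payment(
        balance, annual_rate
    )
```

For an assumed $50,000 balance at 6% with a 20-year repayment term, the monthly payment moves from $250 of interest only to about $358 of principal and interest. Shorter repayment terms make the jump steeper.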
Lenders who do not get ahead of the end-of-draw issue now may see a negative impact on their bottom line and brand perception in the market, and may face increased regulatory scrutiny. It is important for lenders to show an awareness of each consumer’s needs and tailor an appropriate and unique solution. Below is Experian’s recommended best practice for restructuring HELOCs nearing end-of-draw:

- Qualify: Qualify consumers who have a HELOC that was opened between 2006 and 2008
- Assess Viability: Assess which HELOCs are ideal candidates for restructuring based on a consumer’s
  - Overall debt-to-income ratio
  - Combined loan-to-value ratio
- Refine Offer: Refine the offer to tailor it to each consumer’s needs
  - Monthly payment they can afford
  - Opportunity to restructure the debt into a first mortgage
- Target: Target those consumers most likely to accept the offer
  - Consumers with recent mortgage inquiries
  - Consumers who are in the market for a HELOC loan

Lenders should consider partnering with companies that possess the right toolkit in order to give them the greatest decisioning power when restructuring HELOC end-of-draw debt. It is essential that lenders restructure this debt in the most effective and efficient way in order to provide the best overall solution for each individual consumer. Revamp your mortgage and home equity acquisitions strategies with advanced analytics.
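The first two steps of the practice above (qualify by origination year, then assess viability by debt-to-income and combined loan-to-value) might be sketched as a simple filter. The `Heloc` record, its field names, and both cutoffs are hypothetical; the post does not state threshold values, so the numbers below are placeholders only.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class Heloc:
    # Hypothetical account record; field names are illustrative only.
    account_id: str
    opened: date
    monthly_debt: float      # all monthly debt obligations
    monthly_income: float
    total_liens: float       # all loans secured by the property
    property_value: float


# Placeholder cutoffs; the post does not specify values.
MAX_DTI = 0.43
MAX_CLTV = 0.90


def is_qualifying_vintage(h):
    """Qualify: HELOC opened between 2006 and 2008 inclusive."""
    return 2006 <= h.opened.year <= 2008


def is_viable(h):
    """Assess viability: debt-to-income and combined loan-to-value within limits."""
    if h.monthly_income <= 0 or h.property_value <= 0:
        return False
    dti = h.monthly_debt / h.monthly_income
    cltv = h.total_liens / h.property_value
    return dti <= MAX_DTI and cltv <= MAX_CLTV


def restructure_candidates(helocs):
    """Return account IDs that pass both the qualify and viability steps."""
    return [h.account_id for h in helocs if is_qualifying_vintage(h) and is_viable(h)]
```

The refine and target steps depend on data such as recent mortgage inquiries, which is why they are left out of this sketch.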

By: Kyle Enger, Executive Vice President of Finagraph Small business remains one of the largest and most profitable client segments for banks. They provide low-cost deposits and high-quality loans, and offer numerous cross-selling opportunities. However, recent reports indicate that a majority of business owners are dissatisfied with their banking relationship. In fact, more than 33 percent are actively shopping for a new relationship. With limited access to credit after the worst of the financial crisis, plus a lack of service and attention, many business owners have lost confidence in banks and their bankers. Before the financial crisis, business owners ranked their banker number three on the list of top trusted advisors. Today bankers have fallen to number seven – below the medical system, the president and religious organizations, as reported in a recent Gallup poll, “Confidence in Institutions.” In order to gain a foothold with existing clients and prospects, here is a roadmap banks can use to build trust and effectively meet the needs of today’s small business client. Put feet on the street. To rebuild trust, banks need to get in front of their clients face to face and begin engaging with them on a deeper level. Even in the digital age, business customers still want to have face-to-face contact with their bank. The only way to effectively do that is to put feet on the street and begin having conversations with clients. Whether it be via Skype, phone calls, text, e-mail or Twitter – having knowledgeable bankers accessible is the first step in creating a trusting relationship. Develop business acumen. Business owners need someone who is aware of their pain points, can offer the correct products according to their financial need, and can provide a long-term plan for growth. In order to do so, banks need to invest in developing the business and relationship acumen of their sales forces to empower them to be trusted advisors.
One of the best ways to launch a new class of relationship bankers is to start investing in educational events for both the bankers and the borrowers. This creates an environment of learning, transparency and growth. Leverage technology to enhance client relationships. Commercial and industrial lending is an expensive delivery strategy because it means bankers are constantly working with business owners on a regular basis. This approach can be time-consuming and costly as bankers must monitor inventory, understand financials, and make recommendations to improve the financial health of a business. However, if banks leverage technology to provide bankers with the tools needed to be more effective in their interactions with clients, they can create a winning combination. Some examples of this include providing online chat, an educational forum, and a financial intelligence tool to quickly review financials, provide recommendations and make loan decisions. Authenticate your value proposition. Business owners have choices when it comes to selecting a financial service provider, which is why it is important that every banker has a clearly defined value proposition. A value proposition is more than a generic list of attributes developed from a routine sales training program. It is a way of interacting, responding and collaborating that validates those words and makes a value proposition come to life. Simply claiming to provide the best service means nothing if it takes 48 hours to return phone calls. Words are meaningless without action, and business owners are particularly jaded when it comes to false elevator speeches delivered by bankers. Never stop reaching out. Throughout the lifecycle of a business, its owner uses between 12 and 15 bank products and services, yet the national product per customer ratio averages around 2.5. Simply put, companies are spreading their banking needs across multiple organizations. The primary cause? 
The banker likely never asked them if they had any additional businesses or needs. As a relationship banker to small businesses, it is your duty to bring the power of the bank to the individual client. By focusing on adding value through superior customer experience and technology, financial institutions will be better positioned to attract new small business banking clients and expand wallet share with existing clients. By implementing these five strategies, you will create closer relationships, stronger loan portfolios and a new generation of relationship bankers. To view the original blog posting, click here. To read more about the collaboration between Experian and Finagraph, click here.

By: Linda Haran Complying with complex and evolving capital adequacy regulatory requirements is the new reality for financial service organizations, and it doesn’t seem to be getting any easier to comply in the years since CCAR was introduced under the Dodd Frank Act. Many banks that have submitted capital plans to the Fed have seen them approved in one year and then rejected in the following year’s review, making compliance with the regulation feel very much like a moving target. As a result, several banks have recently pulled together a think tank of sorts to collaborate on what the Fed is looking for in capital plan submissions. Complying with CCAR is a very complex, data intensive exercise which requires specialized staffing. An approach or methodology to preparing these annual submissions has not been formally outlined by the regulators and banks are on their own to interpret the complex requirements into a comprehensive plan that will ensure their capital plans are accepted by the Fed. As banks work to perfect the methodology used in this exercise, the Fed continues to fine tune the requirements by changing submission dates, Tier 1 capital definitions, etc. As the regulation continues to evolve, banks will need to keep pace with the changing nature of the requirements and continually evaluate current processes to assess where they can be enhanced. The capital planning exercise remains complex and employing various methodologies to produce the most complete view of loss projections prior to submitting a final plan to the Fed is a crucial component in having the plan approved. Banks should utilize all available resources and consider partnering with third party organizations who are experienced in both loss forecasting model development and regulatory consulting in order to stay ahead of the regulations and avoid a scenario where capital plan submissions may not be accepted. 
Learn how Experian can help you meet the latest regulatory requirements with our Loss Forecasting Model Services.

Do you really know where your commercial and small business clients stand financially? I bet if you ask your commercial lending relationship managers they will say they do - but do they really? The bigger question is how you could be more tied in to your business clients so that you could give them real advice that may save their businesses. More questions? Nope, just one answer. Finagraph with Experian’s Advisor for relationship lending is a perfect setup to gather data that you are currently using within your financial institution and match it up with real financial spreads from the accounting systems that your business clients use in their everyday process. By comparing the two sources of records, you can get a true perspective on where your business clients stand and empower your relationship managers like never before.

In today’s world, it seems as though there is a statistic that we can apply to just about anything. Whether it’s viewership of the Super Bowl, popularity of breakfast cereal or the number of red M&Ms that come in a pack, I bet the data is out there. In fact, there is so much data in the world that Emery Simon of the Business Software Alliance once said that if data were placed on DVDs, it would create a stack tall enough to reach the moon. But let’s take a step back. If you break it down to its bare bones, all data is a bunch of numbers. Until you can understand what those numbers mean, data by itself isn’t that helpful. Delivering data insights in order for our clients to make better decisions is at the core of everything we do at Experian. We are continuously looking for ways to use our data for good. This is especially critical for the automotive industry, including dealerships, manufacturers, lenders and consumers alike. For example, with data and insights, manufacturers and dealerships can better understand what vehicles consumers are purchasing, as well as where certain vehicle segments are most popular. This information can help them decide which vehicle models are performing or where to move inventory. For automotive lenders, gaining insight into the shifts in consumer payment behavior enables them to take the appropriate action when making decisions on loan terms and interest rates. By leveraging this information, lenders are able to minimize their own risk and improve profitability. On the consumer side, a vehicle is often the second largest purchase they will make. It’s important, especially when purchasing a used vehicle, to get as much information as possible to make the best decision. Vehicle history reports contain hundreds of data points from a variety of sources that provide insight into whether a vehicle has been in an accident, has frame damage, or has been subject to odometer fraud, among other things.
Consumers are able to take these insights to assist in the car buying process to ensure the vehicle is safe and meets their own standards. Leveraging the information available to make better decisions across the board will help the industry and consumers cruise down the highway of success. And that’s how we roll …

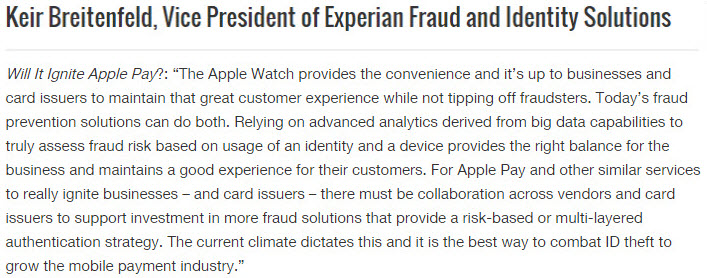

Apple Pay fraud solution Apple Pay is here and so are increased fraud exposures, confirmed losses, and customer experience challenges among card issuers. The exposure associated with the provisioning of credit and debit cards to the Apple Pay application was expected in time, as fraudsters are the first group to find weaknesses. Evidence from issuers and analyst reports points to fraud as the result of established credit/debit cards compromised through data breaches or other means that are being enrolled into Apple Pay accounts – and being used to make large-value purchases at large merchants. Keir Breitenfeld, our vice president of Fraud and Identity solutions, said as much in a recent PYMNTS.com story where he was quoted about whether the Apple Watch will help grow Apple Pay. The challenge is that card issuers have no real controls over the provisioning or enrollment process, so they currently only have an opportunity to authenticate their cardholder, but not the provisioning device. Fraud exposure can lie within call centers and online existing customer treatment channels due to:

- Identity theft and account takeover based on breach activity.
- Use of counterfeit or breached card data.
- Call center authentication process inadequacies.
- Capacity and customer experience pressures driving human error or subjectively lax due diligence.
- Existing customer/account authentication practices not tuned to this emerging scheme and level of risk.

The good news is that positive improvements have been proven by bolstering risk-based authentication at the card provisioning process points, comparing the inbound provisioning device to the device that is on file for the cardholder account. This, in combination with traditional identity risk analytics, verifications, knowledge-based authentication, and holistic decisioning policies, vastly improves the view afforded to card issuers for layered process point decisioning.
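As a rough illustration of the layered decision described above, the sketch below compares the inbound provisioning device with the devices on file and combines that with an identity risk score. Everything here is a hypothetical assumption for illustration: the function name, the 0 to 1000 score scale, the cutoff, and the three outcomes do not describe any actual Experian or Apple Pay interface.

```python
# Hypothetical cutoff on an assumed 0-1000 identity risk scale (higher = riskier).
HIGH_RISK_SCORE = 500


def provisioning_decision(inbound_device_id, devices_on_file, identity_risk_score):
    """Return 'approve', 'step_up', or 'decline' for a card provisioning request."""
    known_device = inbound_device_id in devices_on_file
    high_risk = identity_risk_score >= HIGH_RISK_SCORE

    if known_device and not high_risk:
        return "approve"
    if not known_device and high_risk:
        return "decline"
    # Mixed signals: ask for more proof, e.g. knowledge-based authentication.
    return "step_up"
```

Routing mixed signals to a step-up check rather than an outright decline is one way to limit friction for legitimate cardholders who have simply bought a new phone.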
Learn more on why emerging channels, like mobile payments, call for advanced fraud identification techniques.

The following blog post is by Kyle Enger, Executive Vice President of Finagraph

With the surge of alternative lenders, competition among banks is stronger than ever. But what exactly does that mean for the everyday banker? It means business owners want more. If you’re only meeting your clients once a year on a renewal, it’s not good enough. In order to take your customer service to the next level, you need to become a trusted advisor: someone who understands where your clients are going and how to help them get there. If you’re not investing in your clients’ business by taking the following actions, they may have one foot out the door.

1. Understand your clients’ business

One of the biggest complaints from business owners is that bankers simply don’t understand their business. A good commercial banker should be well-versed in their borrower’s company, competitors and the industry. They should be willing to get to know their business, commit to them, stop by to check in and provide a proactive plan to avoid future risks.

2. Utilize technology for your benefit

The majority of recent bank innovations have been used to make the customer experience more convenient, but not necessarily more helpful. We’ve seen everything from mobile remote deposit capture to online banking to mobile payments – all of which are keeping customers from interacting with the bank. Contrary to what many think, technology can be used to create strong relationships by giving bankers information about their customers to help serve them better. Using new software programs, bankers can see information like the current ratio, quick ratio, debt-to-equity, gross margin, net margin and ROI within seconds.

3. Heighten financial acumen

Banks have access to a vast amount of customer financial data, but sometimes fail to use this information to its full potential. With insight into consumer purchasing behavior and businesses’ financial history, banks should be able to cater products and services to clients in a personalized manner. However, many lenders walk into prospect meetings without knowing much about the business. Their mode of operation is solely focused on trying to secure new clients by building rapport – they are what we call surface bankers. A good banker will educate clients on what they need to know, such as equity, inventory, cash flow, retirement planning and sweep accounting. They should also know about new technology and consult borrowers on intermediate financing, terming out loans that are not revolving, or locking in low interest rates. After that, they will bring in the right specialist to match the product to their clients’ needs.

4. Go beyond the price

Many business owners make the mistake of comparing banks based on cost, but the value of a healthy banking relationship and a financial guide is priceless. So many bankers these days are application gatherers working on a transactional basis, but that’s not what business owners need. They need to stop looking at the short-term convenience of brands, price and location, and start considering the long-term effects a trusted financial advisor can have on their business.

5. A partner in your business, not a banker

“Sixty percent of businesses are misfinanced using short-term money for long-term use,” according to the CEB Business Banking Board. In other words, there are many qualified candidates in need of a trusted banker to help them succeed. Unbeknownst to many business owners, bankers actually want to make loans and help their clients’ business grow. Making this known is the baseline in building a strong foundation for the future of your career. Just remember to ask yourself – am I being business-centric or bank-centric?

To view the original blog posting, click here.
To read more about the collaboration between Experian and Finagraph, click here.
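For readers less familiar with the ratios named in point 2 above, here is a minimal sketch of how they are commonly calculated from financial statement figures. The field names and sample numbers are hypothetical, and this is not a description of how Finagraph or any other product computes them. ROI is left out because its definition varies by context.

```python
def financial_ratios(f):
    """Compute common ratios from a dict of statement figures (same currency throughout)."""
    return {
        "current_ratio": f["current_assets"] / f["current_liabilities"],
        # Quick ratio here uses the common "current assets less inventory" definition.
        "quick_ratio": (f["current_assets"] - f["inventory"]) / f["current_liabilities"],
        "debt_to_equity": f["total_liabilities"] / f["shareholders_equity"],
        "gross_margin": (f["revenue"] - f["cost_of_goods_sold"]) / f["revenue"],
        "net_margin": f["net_income"] / f["revenue"],
    }


# Hypothetical figures for a small business.
sample = {
    "current_assets": 500_000,
    "inventory": 200_000,
    "current_liabilities": 250_000,
    "total_liabilities": 600_000,
    "shareholders_equity": 400_000,
    "revenue": 1_000_000,
    "cost_of_goods_sold": 600_000,
    "net_income": 80_000,
}

for name, value in financial_ratios(sample).items():
    print(f"{name}: {value:.2f}")
```

With these sample figures the current ratio is 2.00 and the quick ratio is 1.20, which gives a banker a quick read on short-term liquidity before a client meeting.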

Gift card fraud
Gift cards have risen in popularity over the last few years. The National Retail Federation anticipated more than $31B in gift card sales during the 2014 holiday season alone. Gift cards are the most requested gift item, and they have been for eight years in a row. Total gift card sales for 2014 were anticipated to top $100 billion.
Gift cards are a practical gift: the purchaser can let the recipient pick exactly what they want, eliminating the worry of picking something that doesn’t fit right, that is a duplicate, or that the recipient just might not want. They are also incredibly convenient, quick, and easy to purchase. The stigma behind gift cards is starting to fade, and they no longer seem like an impersonal gifting option. Additionally, the types of gift cards available have expanded greatly in the last few years. If you are a procrastinator, there are eGift Cards or eCertificates, which can be emailed to the recipient in a matter of minutes. If you are truly unsure what to purchase, you can give an open-loop card, which is usually branded by Visa, MasterCard, or American Express and can be used anywhere the brand’s logo appears.
Carrying gift cards also seems like a quick win for merchants. The overhead cost to store them is extremely low because a small box of gift cards takes up very little space. When customers come in to redeem their GC, they usually spend more than the original value of the card itself, allowing for additional revenue capture. Something else merchants have started doing in this big data world we live in is tying gift cards to consumer loyalty programs. Reloadable cards are now linked to a specific customer, who can also tie a credit card to the account; that card is automatically charged once the account balance falls below a pre-defined threshold.
These new consumer loyalty accounts can be used to track spending history, tailor offers to the specific customer, and continue to expand on the immersive brand experience. Recently, a certain Mexican-themed fast food establishment launched their new mobile app; in the app, you could pre-order food, send and redeem eGCs, and find the nearest location. I don’t even eat at this establishment, but the innovation of their app was so enticing that I installed it the morning it came out, purchased an eGC for my husband, and pre-ordered breakfast. It was extremely easy and convenient, and I got a free taco! Now they have my soul. Okay, maybe not my soul, but they have my credit card data, purchasing preferences, device information, and location, which is almost the same thing at this point. After the experience, I found myself asking why other merchants haven’t already done this or why it hasn’t taken off yet. This is a great example of how gift cards and emerging technology are being used as a marketing tool to entice consumers and build up a customer base.
In the rare instance that a gift recipient does not actually find value in their gift card (the horror!), there’s a multitude of options for trading it in or redeeming it for cash. Some well-known websites for trade-in are Giftcard Granny, Card Hub, and raise.com; it’s also incredibly common to find discounted GCs for sale on eBay, Craigslist, and Facebook groups. A couple of familiar names that have recently entered the mix are Wal-Mart and CoinStar. You can now exchange your physical gift card for cash at specific CoinStar machines, and if you don’t feel like leaving your home, you can exchange your card online with Wal-Mart, which will provide you with a Wal-Mart gift card that can be redeemed online or in stores. It’s such common practice that you can find articles on this topic on local, national, and 24-hour news websites.
This tremendous revenue booster does not come free of risk, however.
We know that fraudsters are clever and opportunistic. They will exploit every weakness possible and take advantage of programs that are being used to enhance the consumer experience. But are they really stealing all these gift cards for personal gain and taking all of their friends out to their favorite local coffee shop for free drinks? Stay tuned for the second part of this blog, which talks more about the fraud risks associated with gift cards and what you can do to mitigate them.
Please note: *GC and eGC are used interchangeably with gift card and eGift Card.

By: Mike Horrocks
Experian has announced a new agreement with Finagraph, a best-in-class automated financial intelligence tool provider, to provide the banking industry with software to evaluate small business financials faster. Loan automation is key to pulling data together in a meaningful manner, and this bank offering will provide consistently formatted financials for easier lending assessment.
Finagraph’s automated financial intelligence tool delivers advanced analytics and data verification that present small business financial information in a consistent format, making it easier for lenders to understand the commercial customer’s business. Experian’s portfolio risk management platform addresses the overall risks and opportunities within a loan portfolio. The company’s relationship lending platform provides a framework to automate, integrate and streamline commercial lending processes, including small and medium-sized enterprise and commercial lending. Both data-driven systems are designed to accommodate and integrate existing bank processes, saving time and improving client engagement.
“Finagraph connects bankers and businesses in a data-driven way that leads to better insights that strengthens customer relationships,” said John Watts, Experian Decision Analytics director of product management. “Together we are helping our banking clients deliver the trusted advisor experience their business customers desire in a new industry-leading way.”
“The lending landscape is rapidly changing. With new competitors entering the space, banks need innovative tools that allow them to maintain an advantage,” said James Walter, CEO and President of Finagraph. “We are excited about the way that our collaboration with Experian’s Baker Hill Advisor gives banks an edge by enabling them to connect with their clients in a meaningful way. 
Together we are hoping to empower a new generation of trusted advisors.”
Learn more about our portfolio risk management and lending solutions. For more information on Finagraph, please visit www.finagraph.com.

Deposit accounts for everyone
Over the last several years, the Consumer Financial Protection Bureau (CFPB) has, not so quietly, been actively pushing for changes in how banks decision applicants for new checking accounts. Recent activity by the CFPB is accelerating the pace of this change for those managing deposit-gathering activities within regulated financial institutions. It is imperative that banks begin adopting modern technology and product strategies designed for a digital age instead of an age before the internet even existed.
In October 2014, the CFPB hosted the Forum On Access To Checking Accounts to push for more transparent account opening procedures, suggesting that banks’ use of “blacklists” that effectively “exclude” applicants from opening a transaction account is too opaque. Current regulatory trends are increasingly signaling the need for banks to bring checking account origination strategies into the 21st century, as I indicated in Banking in the 21st Century. The operations and technology implications for banks must include modernizing the approach to account opening in a way that goes beyond using different decision data to do “the same old thing,” which only partially addresses the broader concerns of consumers and regulators.
Product features attached to checking accounts, such as overdraft shadow limits, can be offered to consumers where this liquidity feature matches what the customer can afford. Banking innovation calls for deposit gatherers to find more ways to approve a basic transaction account, such as a checking account, in a way that considers the consumer’s ability to repay and limits approval of overdraft features for some checking accounts even if the consumer opts in. This doesn’t mean banks cannot use risk management principles in assessing which customers get that added liquidity management functionality attached to a checking account.
It just means that overdraft should be one part of the total customer-level exposure the bank considers in the risk assessment process. The looming regulatory impacts to overdraft fees, seemingly predictable, will further reduce bank revenue in an industry that has been hit hard over the last decade. Prudent financial institutions should begin managing the impact of additional lost fee revenue now and do it in a way that customers and regulators will appreciate.
The CFPB has been signaling other looming changes to checking account regulations, which are likely to accelerate throughout 2015 and portend further large impacts to bank overdraft revenue. Foreshadowing this change are the 2013 overdraft study by the CFPB and the proposed rules for prepaid cards published for commentary in December 2014, where prepaid account overdraft is “subject to rules governing credit cards under TILA, EFTA, and their implementing regulations”. That’s right, the CFPB has concluded that overdraft for prepaid cards is the same as a loan falling under Reg Z. If the interpretation is applied to checking account debit card overdraft rules, it would effectively turn overdraft fees into finance charges and eliminate a huge portion of banks’ remaining profitability from those fees.
The good news for banks is that the solution for the new deposits paradigm lies in bringing retail banking platforms into the 21st century, with the ability to set exposure for customers at the client level and apportion it to products or features such as overdraft. Proactively managing regulatory change that is predictable and sure to come means considering what consumers can afford and offering products that match their needs and ability to repay. The risk decision is no different for unsecured lending in credit cards than for overdraft limits attached to a checking account.
Banks that become more innovative by offering checking accounts that give consumers more flexible and transparent liquidity management functionality at a reasonable price will differentiate themselves in the marketplace and with regulatory bodies such as the CFPB. Conducting a capabilities assessment, or business review, to assess product innovation options, like combining digital lines of credit with checking accounts, will tell your business what it should do to maintain customer profitability. I recommend three steps to begin the change process and proactively manage through the deposit industry regulatory changes that lie ahead:
First, assess the impact of potential lost fees if current overdraft fees are further limited or eliminated, and quantify what that means for your product profitability.
Second, begin designing alternative pricing strategies, product offerings and underwriting strategies that allow you to set total exposure at a client level and apportion this exposure across lending products, including overdraft lines, in a way that is transparent to your customers and aligns with what they can afford.
Third, and this can be done in parallel with steps one and two, begin capability assessments of the core bank decision platform your financial institution uses to open and manage customer accounts, to ensure your technology is prepared to handle future mandatory regulatory requirements without driving all your customers to your competitors.
It is a given that change is inevitable. Deposit organizations are well served to manage this current shift in regulatory policy related to checking account acquisitions in a way that protects their competitive advantage. Banks can stay out in front of competitors by offering transparent and relevant financial products that consumers will be drawn to buy and can’t afford to live without! Thank you for following my blog and insights on DDA best practices.
Please accept my invitation to participate in a short market study. Click here to participate. Participants in this 5-minute survey will receive a copy of the results as a token of appreciation.
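To make the second recommended step above more concrete, here is a minimal sketch of setting one client-level exposure figure and apportioning it across lending products, overdraft included. The product names, the weights, and the 5,000 total are hypothetical values chosen for illustration; how a bank actually derives total exposure from affordability is outside what this sketch covers.

```python
def apportion_exposure(total_exposure, weights):
    """Split a client-level exposure limit across products in proportion to the weights."""
    total_weight = sum(weights.values())
    if total_weight <= 0:
        raise ValueError("weights must sum to a positive number")
    # Rounding to cents can leave the parts a cent or two off the total in general.
    return {
        product: round(total_exposure * weight / total_weight, 2)
        for product, weight in weights.items()
    }


# Hypothetical client: 5,000 of total exposure, with overdraft as the smallest share.
limits = apportion_exposure(5000.00, {"credit_card": 6, "line_of_credit": 3, "overdraft": 1})
print(limits)
# {'credit_card': 3000.0, 'line_of_credit': 1500.0, 'overdraft': 500.0}
```

Treating overdraft as one slice of a single client-level limit is what keeps the overdraft decision consistent with the bank's other unsecured lending decisions for the same customer.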