
As we step into 2025, the convergence of credit and fraud risk has become more pronounced than ever. With fraudsters leveraging emerging technologies and adapting rapidly to new defenses, risk managers need to adopt forward-thinking strategies to protect their organizations and customers. Here are the top fraud trends and actionable resolutions to help you stay ahead of the curve this year.

1. Combat synthetic identity fraud with advanced AI models

The trend: Synthetic identity fraud is surging, fueled by data breaches and advanced AI tooling. Fraudsters are combining genuine credentials with fabricated details, creating identities that evade traditional detection methods.

Resolution: Invest in sophisticated identity validation tools that leverage advanced AI models. These tools can differentiate between legitimate and fraudulent identities, ensuring faster and more accurate creditworthiness assessments. Focus on integrating these solutions seamlessly into your customer onboarding process to enhance both security and user experience.

2. Strengthen authentication against deepfakes

The trend: Deepfake technology is putting immense pressure on existing authentication systems, particularly in high-value transactions and account takeovers.

Resolution: Adopt a multilayered authentication strategy that combines voice and facial biometrics with ongoing transaction monitoring. Dynamic authentication methods that evolve based on user behavior and fraud patterns can effectively counter these advanced threats. Invest in solutions that ensure digital interactions remain secure without compromising convenience.

3. Enhance detection of payment scams and APP fraud

The trend: Authorized Push Payment (APP) fraud and scams are increasingly difficult to detect because they exploit legitimate customer behaviors.

Resolution: Collaborate with industry peers and explore centralized consortia to share insights and develop robust detection strategies. Focus on monitoring both inbound and outbound transactions to identify anomalies, particularly payments to mule accounts.

4. Optimize your fraud stack for efficiency and effectiveness

The trend: Outdated device and network solutions are no match for GenAI-enhanced fraud tactics.

Resolution: Deploy a layered fraud stack with persistent device ID technology, behavioral analytics, and GenAI-driven anomaly detection. Begin with frictionless first-tier tools to filter out low-hanging fraud vectors, reserving more advanced and costly tools for sophisticated threats. Regularly review and refine your stack to ensure it adapts to evolving fraud patterns.

5. Build collaborative relationships with fraud solution vendors

The trend: Vendors offer unparalleled industry insights and long-tail data to help organizations prepare for emerging fraud trends.

Resolution: Engage in reciprocal knowledge-sharing with your vendors. Leverage advisory boards and industry insights to stay informed about the latest attack vectors. Choose vendors who provide transparency and are invested in your fraud mitigation goals, turning product relationships into strategic partnerships.

Turning resolutions into reality

Fraudsters are becoming more ingenious, leveraging GenAI and other technologies to exploit vulnerabilities. To stay ahead of fraud in 2025, let us make fraud prevention not just a resolution but a commitment to safeguarding trust and security in a rapidly evolving landscape.
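The layered, cost-aware stack described in resolution 4 can be sketched as a short-circuiting pipeline. Cheap, frictionless tiers run first. A more expensive tier runs only when the earlier tiers cannot reach a confident verdict. This is a minimal illustration, not any vendor's design. The tier names, per-call costs (in cents), thresholds, blocked device IDs, and the 0-to-1 behavioral risk score are all hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional, Tuple

@dataclass
class Application:
    device_id: str
    behavior_risk: float  # hypothetical 0..1 score from a behavioral tool

@dataclass
class Tier:
    name: str
    cost_cents: int  # hypothetical per-call cost
    # Returns "approve" or "deny" when confident, None to escalate.
    check: Callable[[Application], Optional[str]]

BLOCKED_DEVICES = {"dev-bad-1"}  # hypothetical persistent device IDs

def device_check(app: Application) -> Optional[str]:
    return "deny" if app.device_id in BLOCKED_DEVICES else None

def behavior_check(app: Application) -> Optional[str]:
    if app.behavior_risk < 0.2:
        return "approve"
    if app.behavior_risk > 0.9:
        return "deny"
    return None  # ambiguous: escalate to the next tier

def document_check(app: Application) -> Optional[str]:
    # Stand-in for a costly step-up such as document verification;
    # it never decides here, so ambiguous cases fall through to review.
    return None

STACK = [
    Tier("device_reputation", 1, device_check),
    Tier("behavioral_analytics", 5, behavior_check),
    Tier("document_verification", 150, document_check),
]

def run_stack(app: Application, tiers: List[Tier]) -> Tuple[str, int, List[str]]:
    """Run tiers in order, stopping at the first confident verdict."""
    spent, used = 0, []
    for tier in tiers:
        spent += tier.cost_cents
        used.append(tier.name)
        verdict = tier.check(app)
        if verdict is not None:
            return verdict, spent, used
    return "manual_review", spent, used
```

Consider `run_stack(Application("dev-ok", 0.1), STACK)`. It stops after the behavioral tier and returns `("approve", 6, ["device_reputation", "behavioral_analytics"])`, so the costly tier is never called. In this sketch, regularly reviewing the stack means revisiting tier order and thresholds as fraud patterns shift.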

Bots have been a consistent thorn in fraud teams’ side for years. But since the advent of generative AI (genAI), what used to be just one more fraud type has become a fraud tsunami. This surge in fraud bot attacks has brought with it:

- A 108% year-over-year increase in credential stuffing to take over accounts[1]
- A 134% year-over-year increase in carding attacks, where stolen cards are tested[1]
- New account opening fraud at more than 25% of businesses in the first quarter of 2024

While fraud professionals rush to fight back the onslaught, they’re also reckoning with the ever-evolving threat of genAI. A large factor in fraud bots’ new scalability and strength, genAI was the #1 stress point identified by fraud teams in 2024, and 70% expect it to be a challenge moving forward, according to Experian’s U.S. Identity and Fraud Report.

This fear is well-founded. Fraudsters are wasting no time incorporating genAI into their attack arsenal. GenAI has created a new generation of fraud bot tools that make bot development more accessible and sophisticated. These bots reverse-engineer fraud stacks, testing the limits of their targets’ defenses to find triggers for step-ups and checks, then adapt to avoid setting them off.

How do bot detection solutions fare against this next generation of bots?

The evolution of fraud bots

The earliest fraud bots, which first appeared in the 1990s,[2] were simple scripts with limited capabilities. Fraudsters soon began using these scripts to execute basic tasks on their behalf, mainly form spam and light data scraping. Fraud teams responded, implementing bot detection solutions that continued to evolve as the threats became more sophisticated.

The evolution of fraud bots was steady, and mostly balanced against fraud-fighting tools, until genAI supercharged it. Today, fraudsters are leveraging genAI’s core ability (analyzing datasets and identifying patterns, then using those patterns to generate solutions) to create bots capable of large-scale attacks with unprecedented sophistication. These genAI-powered fraud bots can analyze onboarding flows to identify step-up triggers, automate attacks at high-volume times, and even conduct “behavior hijacking,” where bots record and replicate the behaviors of real users.

How next-generation fraud bots beat fraud stacks

For years, a tried-and-true approach to fraud bot detection was to look for non-human giveaways: lightning-fast transition speeds, eerily consistent keystrokes, nonexistent mouse movements, and repeated device and network data were all telltale signs of a bot. Fraud teams could base their bot detection strategies on these behavioral red flags.

Stopping today’s next-generation fraud bots isn’t quite as straightforward. Because they are specifically built to mimic human behavior and cycle through device IDs and IP addresses, today’s bots often appear to be normal, human applicants and circumvent many of the barriers that blocked their predecessors. The data the bots provide is better, too:[3] fraudsters are using genAI to streamline and scale the creation of synthetic identities.[4] By equipping their human-like bots with a bank of high-quality synthetic identities, fraudsters have their most potent, advanced attack avenue to date.

Skirting traditional bot detection with their human-like capabilities, next-generation fraud bots can bombard their targets with massive, often undetected, attacks. In one attack analyzed by NeuroID, a part of Experian, fraud bots made up 31% of a business’s onboarding volume on a single day. That’s nearly one-third of the business’s volume made up of bots attempting to commit fraud.
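The sketch below makes the contrast concrete. Its first function encodes the classic giveaways listed above: uniform keystroke timing, no mouse movement, and implausibly fast completion. Its second function is aimed at "behavior hijacking." It rests on the assumption that a bot replaying a recorded human session will click the exact same pixels across many sessions. All thresholds and function names are hypothetical illustrations, not NeuroID's actual signals.

```python
import statistics
from collections import defaultdict
from typing import Dict, List, Set, Tuple

Point = Tuple[int, int]

def classic_bot_flags(key_times_ms: List[float], mouse_moves: int,
                      form_duration_ms: float) -> List[str]:
    """Older, easily evaded heuristics (hypothetical thresholds)."""
    flags = []
    intervals = [b - a for a, b in zip(key_times_ms, key_times_ms[1:])]
    # Simple scripts often type with near-identical gaps between keys.
    if len(intervals) >= 2 and statistics.pstdev(intervals) < 5.0:
        flags.append("uniform_keystrokes")
    if mouse_moves == 0:
        flags.append("no_mouse_movement")
    if form_duration_ms < 1500:
        flags.append("too_fast")
    return flags

def sessions_sharing_pixels(clicks: Dict[str, List[Point]],
                            min_sessions: int = 3) -> Set[str]:
    """Flag sessions whose clicks hit an exact pixel seen in many sessions."""
    sessions_by_point: Dict[Point, Set[str]] = defaultdict(set)
    for session_id, points in clicks.items():
        for point in points:
            sessions_by_point[point].add(session_id)
    flagged: Set[str] = set()
    for sessions in sessions_by_point.values():
        if len(sessions) >= min_sessions:
            flagged |= sessions
    return flagged
```

A scripted bot that types every 50 ms without moving the mouse trips all three classic flags. A bot that adds random jitter and synthetic cursor paths passes them, which is the weakness described above. The cross-session check looks at the whole population rather than one session. For example, `sessions_sharing_pixels({"s1": [(412, 300)], "s2": [(412, 300)], "s3": [(412, 300)], "s4": [(90, 45)]})` returns `{"s1", "s2", "s3"}`.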
If the business hadn’t had the right tools in place to separate these bots from genuine users, it wouldn’t have been able to stop the attack until it was too late.

Beating fraud bots with behavioral analytics: The next-generation approach

Next-generation fraud bots pose a unique threat to digital businesses: their data appears legitimate, and they look like a human when they’re interacting with a form. So how do fraud teams differentiate fraud bots from actual human users?

NeuroID’s product development teams discovered key nuances that separate next-generation bots from humans, and we’ve updated our industry-leading bot detection capabilities to account for them. A big one is mousing patterns: random, erratic cursor movements are part of what makes next-generation bots so eerily human-like, but their movements are still noticeably smoother than a real human’s. Other bot detection solutions (including our V1 signal) wouldn’t flag these advanced cursor movements as bot behavior, but our new signal is designed to identify even the most granular giveaways of a next-generation fraud bot.

Fraud bots will continue to evolve. But so will we. For example, behavioral analytics can identify repeated actions, down to the pixel a cursor lands on, during a bot attack and block users exhibiting those behaviors. Our behavioral analytics was built specifically to combat next-gen challenges with scalable, real-time solutions. This proactive protection against advanced bot behaviors is crucial to preventing larger attacks.

For more on fraud bots’ evolution, download our Emerging Trends in Fraud: Understanding and Combating Next-Gen Bots report.

Sources

1. HUMAN Enterprise Bot Fraud Benchmark Report
2. Abusix
3. NeuroID
4. Biometric Update

Protecting consumer information is paramount in today’s digital age, especially for financial institutions. With cyber threats on the rise, robust user authentication methods are essential to safeguard sensitive data. This guide will walk you through the various user authentication types and methods, focusing on solutions that can help financial institutions enhance their security measures and protect consumers’ personal information.

Understanding user authentication types

Single-factor authentication (SFA)

Single-factor authentication is the most basic form of authentication, requiring only one piece of information, such as a password. While it’s easy to implement, SFA has significant drawbacks, particularly in the financial sector where security is critical. Passwords can be easily compromised through phishing or brute-force attacks, making SFA insufficient on its own.

Two-factor authentication (2FA)

Two-factor authentication uses two different factors to verify a user’s identity. For example, a bank might require a consumer to enter their password and then confirm their identity with a code sent to their mobile device. This method enhances security without overcomplicating the user experience.

Multi-factor authentication (MFA)

Multi-factor authentication adds an extra layer of security by requiring two or more verification factors from different categories. These categories typically include something you know (a password), something you have (a token or smartphone), and something you are (biometric data, such as a fingerprint or facial scan). MFA significantly reduces the risk of unauthorized access, making it a crucial component for financial institutions.

Common authentication methods

Password-based authentication

Passwords are the most common form of authentication. However, they come with challenges, especially in the financial sector. Weak or reused passwords can be easily exploited. Financial institutions should enforce strong password policies and educate consumers on creating secure passwords.

Biometric authentication

Biometric authentication uses unique biological characteristics, such as fingerprints, facial recognition, or iris scans, to verify identity. This method is becoming increasingly popular in banking due to its convenience and high level of security. A potential drawback, however, is that it raises privacy concerns.

Token-based authentication

Token-based authentication involves the use of physical or software tokens. Physical tokens, such as key fobs, generate a one-time code for login. Software tokens, such as authenticator apps on a mobile device, provide similar functionality. This method is highly secure and is often used in financial transactions.

Certificate-based authentication

Certificate-based authentication uses digital certificates to establish a secure connection. This method is commonly used in secure communications within financial systems. While it offers robust security, implementing and managing digital certificates can be complex.

Two-factor authentication (2FA) solutions

2FA is a practical and effective way to enhance security. Popular methods include SMS-based codes, app-based authentication, and email-based verification. Each method has its pros and cons, but all provide an additional layer of security that is vital for protecting financial data.

Many financial institutions have successfully implemented two-factor authentication solutions. For example, a bank might use SMS-based 2FA to verify transactions, significantly reducing fraud. Another institution might adopt app-based 2FA, offering consumers a more secure and convenient way to authenticate their identity.

Multi-factor authentication (MFA) solutions

MFA is essential for financial institutions aiming to enhance security.
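App-based 2FA and software tokens, both described above, are commonly built on the time-based one-time password (TOTP) algorithm from RFC 6238. TOTP extends the HMAC-based HOTP algorithm from RFC 4226. The sketch below shows the core computation using only Python's standard library. It assumes a secret has already been shared with the user's authenticator app and uses a 30-second time step. It is an illustration only. Production systems should use a vetted library and secure secret storage.

```python
import hashlib
import hmac
import struct
import time
from typing import Optional

def hotp(secret: bytes, counter: int, digits: int = 6) -> str:
    """HMAC-SHA1 one-time password for a counter value (RFC 4226)."""
    digest = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = digest[-1] & 0x0F  # dynamic truncation offset
    code = struct.unpack(">I", digest[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)

def totp(secret: bytes, timestamp: Optional[float] = None,
         step: int = 30, digits: int = 6) -> str:
    """Time-based OTP: HOTP over the number of elapsed time steps (RFC 6238)."""
    if timestamp is None:
        timestamp = time.time()
    return hotp(secret, int(timestamp // step), digits)

def verify_totp(secret: bytes, submitted: str, timestamp: Optional[float] = None,
                step: int = 30, window: int = 1) -> bool:
    """Accept codes from adjacent time steps to tolerate small clock drift."""
    if timestamp is None:
        timestamp = time.time()
    for drift in range(-window, window + 1):
        expected = totp(secret, timestamp + drift * step, step, len(submitted))
        # Constant-time comparison avoids leaking matches through timing.
        if hmac.compare_digest(expected, submitted):
            return True
    return False
```

With the RFC test secret `b"12345678901234567890"`, `totp(secret, 59, digits=8)` returns `"94287082"`, matching the RFC 6238 SHA-1 test vector. The one-step window is a usability trade-off. A wider window tolerates more clock drift, but it also leaves more codes valid at any given moment.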
Multi-factor authentication solutions can provide multiple layers of protection, so that even if one factor is compromised, the remaining factors can still block unauthorized access.

Implementing MFA requires careful planning. Financial institutions should start by assessing their current security measures and identifying areas for improvement. It’s crucial to choose MFA solutions that integrate seamlessly with existing systems. Training staff and educating consumers on the importance of MFA can also help ensure a smooth transition.

Knowledge-based authentication (KBA) solutions

What is KBA?

Knowledge-based authentication relies on information that only the user should know, such as answers to security questions. There are two types: static KBA, which uses preset questions, and dynamic KBA, which generates questions based on the user’s transaction history or other data.

Effectiveness of KBA

While KBA can be effective, it has its limitations. Static KBA is vulnerable to social engineering attacks, where fraudsters gather information about the user to answer security questions. Dynamic KBA offers more security but can be more complex to implement. Financial institutions should weigh the pros and cons of KBA and consider combining it with other methods for enhanced security.

Enhancing KBA security

To improve KBA security, financial institutions can combine it with other user authentication types, such as MFA or 2FA. This layered approach ensures that even if one method is compromised, additional layers of security are in place. Best practices for knowledge-based authentication solutions include regularly updating security questions and using questions that are difficult for others to guess.

Using authentication methods to protect consumer information

Choosing the right authentication methods is crucial for financial institutions to protect consumer information and maintain trust.
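Choosing methods well has one practical consequence for the KBA guidance above. A password and a security question are both "something you know," so pairing them adds a check but not a second factor. The sketch below uses a hypothetical mapping from method names to the three factor categories. It treats a login as multi-factor only when the verified methods span at least two categories.

```python
from enum import Enum
from typing import Iterable, Set

class Factor(Enum):
    KNOWLEDGE = "something you know"
    POSSESSION = "something you have"
    INHERENCE = "something you are"

# Hypothetical method names; map whatever methods your stack supports.
METHOD_CATEGORY = {
    "password": Factor.KNOWLEDGE,
    "static_kba": Factor.KNOWLEDGE,
    "dynamic_kba": Factor.KNOWLEDGE,
    "sms_code": Factor.POSSESSION,
    "totp_app": Factor.POSSESSION,
    "hardware_token": Factor.POSSESSION,
    "fingerprint": Factor.INHERENCE,
    "face_scan": Factor.INHERENCE,
}

def factor_categories(verified_methods: Iterable[str]) -> Set[Factor]:
    """Map verified methods to categories; unknown names raise KeyError."""
    return {METHOD_CATEGORY[method] for method in verified_methods}

def is_multi_factor(verified_methods: Iterable[str]) -> bool:
    """True only when at least two distinct factor categories were verified."""
    return len(factor_categories(verified_methods)) >= 2
```

Here `is_multi_factor(["password", "static_kba"])` is `False`, while `is_multi_factor(["password", "totp_app"])` is `True`. In this sketch, policy choices such as how to classify a given method live entirely in the mapping.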
By understanding and implementing robust authentication solutions like MFA, 2FA, and KBA, banks and financial services companies can significantly enhance their security posture. As cyber threats continue to evolve, staying ahead with advanced authentication methods will be key to safeguarding sensitive data and ensuring consumer confidence.

Experian’s multifactor authentication solutions can enhance your existing authentication process while reducing friction, using risk-assessment tools to apply the appropriate level of security. Learn how your organization can provide faster, more agile mobile transactions, risk protection for your business, and security and peace of mind for your consumers. Visit our website to learn more.

This article includes content created by an AI language model and is intended to provide general information.

Dormant fraud, sleeper fraud, trojan horse fraud . . . whatever you call it, it’s an especially insidious form of account takeover (ATO) fraud that fraud teams often can’t detect until it’s too late. Fraudsters create accounts with stolen credentials or gain access to existing ones, onboard under the fake identity, then lie low, waiting for an opportunity to attack. It takes a strategic approach to defeat the enemy from within, and fraudsters assume you won’t have the tools in place to even know where to start.

Dormant fraud uncovered: A case study

NeuroID, a part of Experian, has seen the dangers of dormant fraud play out in real time. A payment processor that had just become a NeuroID customer wanted to backtest its user base for potential signs of fraud. Upon analyzing the onboarding behavioral data of its customer base, we discovered that more than 100K accounts were likely to be dormant fraud. The payment processor hadn’t considered these accounts suspicious and didn’t see any risk in letting them remain active, despite the fact that none of them had completed a transaction since onboarding.

Why did we flag these as risky?

- Low familiarity: Our testing revealed behavioral red flags, such as copying and pasting into fields or constant tab switching. These are strong indicators that the applicant is applying with personally identifiable information (PII) that isn’t their own.
- Fraud clusters: Many of these accounts used the same web browser, device, and IP address during sign-up, suggesting that one fraudster was signing up for multiple accounts. We found hundreds of clusters like these, many with 50 or more accounts belonging to the same device and IP address within our customer’s user base.

It was clear that this payment processor’s fraud stack had gaps that left it vulnerable. These dormant accounts could have caused significant damage once mobilized: receiving or transferring stolen funds, misrepresenting their financial position, or building toward a bust-out.
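The two account-level patterns in this case study can be expressed as simple backtest queries over account records. One is a shared sign-up fingerprint; the other is no activity since onboarding. The sketch below assumes hypothetical field names. The 50-account cluster size mirrors the figure above, and the 90-day idle threshold is a hypothetical choice. It does not reproduce the behavioral low-familiarity signals, such as copy-paste or tab switching.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import date
from typing import Dict, List, Optional, Tuple

@dataclass
class Account:
    account_id: str
    browser: str
    device_id: str
    ip_address: str
    onboarded: date
    last_transaction: Optional[date] = None  # None means never transacted

Fingerprint = Tuple[str, str, str]

def signup_clusters(accounts: List[Account],
                    min_size: int = 50) -> Dict[Fingerprint, List[str]]:
    """Group accounts by (browser, device, IP) at sign-up; keep large groups."""
    groups: Dict[Fingerprint, List[str]] = defaultdict(list)
    for acct in accounts:
        groups[(acct.browser, acct.device_id, acct.ip_address)].append(acct.account_id)
    return {fp: ids for fp, ids in groups.items() if len(ids) >= min_size}

def dormant_accounts(accounts: List[Account], as_of: date,
                     min_idle_days: int = 90) -> List[str]:
    """Accounts that never transacted and were onboarded long enough ago."""
    return [
        acct.account_id
        for acct in accounts
        if acct.last_transaction is None
        and (as_of - acct.onboarded).days >= min_idle_days
    ]
```

Accounts that appear in both results are natural candidates for review before they are mobilized: they belong to a large shared-fingerprint cluster and have never transacted.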
Dormant fraud thrives in the shadows beyond onboarding. These fraudsters keep accounts “dormant” until they’re long past onboarding detection measures. And once they’re in, they can often easily transition to a higher-risk account; after all, they’ve already been treated as trustworthy. This type of attack can involve fraudulent accounts remaining inactive for months, allowing them to bypass standard fraud detection methods that focus on immediate indicators.

Dormant fraud gets even more dangerous when a hijacked account has built trust just by existing. For example, some banks offer a higher credit line to current customers, regardless of their activity to date. The more accounts an identity has in good standing, the greater the chance that it will be mistaken for a good customer and given even more opportunities to commit higher-level fraud.

This is why we often talk to our customers about progressive onboarding as a way to overcome both dormant fraud risks and the onboarding friction caused by asking for too much information, too soon.

Progressive onboarding, dormant fraud, and the friction balance

Progressive onboarding moves away from the one-size-fits-all model by gathering only truly essential information initially and asking for more as customers engage more. This directly counterbalances the approach that sometimes turns customers off by asking for too much too soon and adding too much friction at initial onboarding. It also helps ensure ongoing checks that fight dormant fraud. We’ve seen this approach, already growing in popularity in payment processing, prove especially useful in every type of financial business.

Here’s how it works:

- A prospect visits your site to explore options. They may just want to understand fees and get a feel for your offerings. At this stage, you might ask for minimal information, just a name and email, without requiring a full fraud check or credit score. It’s a low-commitment ask that keeps things simple for casual prospects who are just browsing, while also keeping your costs low so you don’t spend a full fraud check on an uncommitted visitor.
- As the prospect becomes a true customer and begins making small transactions, say a $50 transfer, you request additional details like their date of birth, physical address, or phone number. This minor step-up in information allows for a basic behavioral analytics fraud check while keeping the time and PII requested low for a low-risk activity.
- With each new level of engagement and transaction value, the information requested increases accordingly. If the customer wants to transfer larger amounts, like $5,000, they’ll understand the need to provide more details. This aligns with the idea of a privacy trade-off, where the customer’s willingness to share information grows as their trust and need for services increase.

Meanwhile, your business allocates resources to those who are fully engaged, rather than to one-time visitors or casual sign-ups, and keeps an eye on dormant fraudsters who might have expected no barrier to additional transactions.

Progressive onboarding is effective not just against dormant fraud and onboarding friction, but also against fraudsters who sneak in through unseen gaps. In another case, we worked with a consumer finance platform to help identify gaps in its fraud stack. In one attack, fraudsters probed until they found the product with the lowest barrier to entry; once inside, they immediately launched a full-force bot attack aimed at higher-value returns. The attack wasn’t based on dormancy, but on complacency. The fraudsters assumed this consumer finance platform wouldn’t realize that low-control onboarding for one product could give easy access to much more. And they were right.

After closing that vulnerability, we helped this customer create progressive onboarding that applies behavior-based fraud controls to every single user, including low-risk entry points and existing account holders who had built that assumed trust. This weeded out dormant fraudsters already onboarded who were trying to take advantage of that trust, because they now had to pass behavioral analytics and other new controls matched to the risk level of each product.

Behavioral analytics gives you confidence that every customer is trustworthy, from the moment they enter the front door to long after they’ve kicked off their shoes to stay a while.

Behavioral analytics shines a light on shadowy corners

Behavioral analytics is proven beyond onboarding: within any part of a user interaction, our signals detect low familiarity, high-risk behavior, and likely fraud clusters. In our experience, building a progressive onboarding approach with these signals alone would provide significant results and would help stop sophisticated fraudsters from perpetrating dormant fraud, including large-scale bust-outs.

Want to find out how progressive onboarding might work for you? Contact us for a free demo and a deep dive into how behavioral analytics can help throughout your user journey.
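The progressive onboarding steps can be sketched as a lookup from transaction value to the data and checks required. The thresholds follow the $50 and $5,000 examples. The field names and check names are hypothetical, as are the fields required at the $5,000 tier, which the text leaves unspecified. The behavioral check applies at every tier and depends only on the requested action, not on account age. That design reflects the point that long-standing accounts should not be exempt.

```python
from typing import Iterable, List, NamedTuple, Set, Tuple

class OnboardingTier(NamedTuple):
    min_amount: float   # lowest transaction value this tier covers
    fields: Set[str]    # PII required at this tier
    checks: List[str]   # controls to run at this tier

# Hypothetical tiers, ordered by ascending min_amount.
TIERS = [
    OnboardingTier(0, {"name", "email"}, ["behavioral"]),
    OnboardingTier(50, {"name", "email", "date_of_birth", "address", "phone"},
                   ["behavioral"]),
    OnboardingTier(5000, {"name", "email", "date_of_birth", "address", "phone",
                          "government_id"},
                   ["behavioral", "identity_verification"]),
]

def tier_for(amount: float) -> OnboardingTier:
    """Return the highest tier whose threshold the requested amount reaches."""
    selected = TIERS[0]
    for tier in TIERS:
        if amount >= tier.min_amount:
            selected = tier
    return selected

def step_up(amount: float, collected: Iterable[str]) -> Tuple[Set[str], List[str]]:
    """Return (missing fields, checks to run), regardless of account age."""
    tier = tier_for(amount)
    return tier.fields - set(collected), tier.checks
```

For example, `step_up(50, {"name", "email"})` asks for date of birth, address, and phone. A $5,000 request from the same customer additionally triggers the identity verification check.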

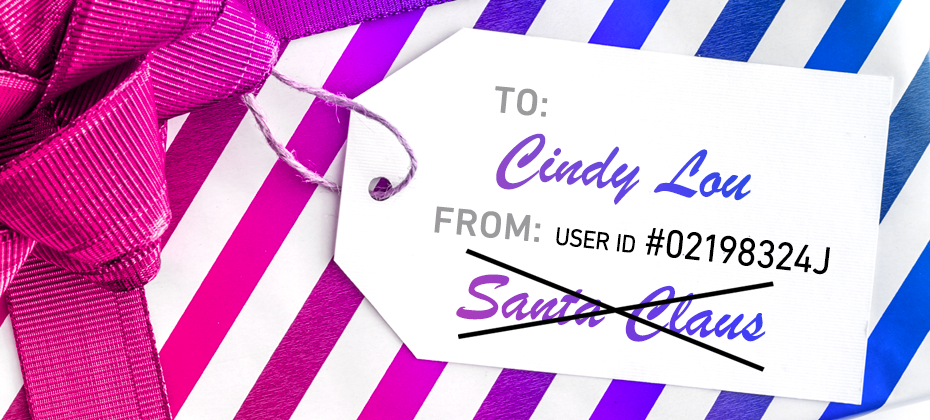

A tale of synthetic ID fraud

Synthetic ID fraud is a growing issue that affects everyone, including high-profile individuals. A notable case from Ohio involved Warren Hayes, who managed to get an official ID card in the name of “Santa Claus” from the Ohio Bureau of Motor Vehicles. He also registered a vehicle, opened a bank account, and secured an AAA membership under this name, listing his address as 1 Noel Drive, North Pole, USA.

This elaborate ruse unraveled after Hayes, disguised as Santa, got into a minor car accident. When the police requested identification, Hayes presented his Santa Claus ID. He was subsequently charged under an Ohio law prohibiting the use of fictitious names. However, the court, presided over by Judge Thomas Gysegem, dismissed the charge, reasoning that because Hayes had used the ID for over 20 years, “Santa Claus” was effectively a “real person” in the eyes of the law. The judge’s ruling raised eyebrows and left one glaring question unanswered: how could official documents in such a blatantly fictitious name go undetected for two decades?

From Santa Claus to synthetic IDs: the modern-day threat

The Hayes case might sound like a holiday comedy, but it highlights a significant issue that organizations face today: synthetic identity fraud. Unlike traditional identity theft, synthetic ID fraud does not rely on stealing an existing identity. Instead, fraudsters combine real and fictitious details to create a new “person.” Think of it as an elaborate game of make-believe, where the stakes are millions of dollars. These synthetic identities can remain under the radar for years, building credit profiles, obtaining loans, and committing large-scale fraud before detection. Just as Hayes tricked the Bureau of Motor Vehicles, fraudsters exploit weak verification processes to pass as legitimate individuals.

According to KPMG, synthetic identity fraud costs banks a staggering $6 billion. To perpetrate the crime, malicious actors combine real and fake information to fabricate a synthetic identity, also known as a “Frankenstein ID.” The financial industry classifies synthetic identity fraud into several types:

- Manipulated synthetics: A real person’s data is modified to create variations of that identity.
- Frankenstein synthetics: The data represents a combination of multiple real people.
- Manufactured synthetics: The identity is completely synthetic.

How organizations can combat synthetic ID fraud

A multifaceted approach to detecting synthetic identities that integrates advanced technologies can form the foundation of a sound fraud prevention strategy:

- Advanced identity verification tools: Use AI-powered tools that cross-check identity attributes across multiple data points to flag inconsistencies.
- Behavioral analytics: Monitor user behaviors to detect anomalies that may indicate synthetic identities. For instance, a newly created account applying for a large loan with perfect credit is a red flag.
- Digital identity verification: Implement digital onboarding processes that include online identity verification with real-time document verification. Users can upload government-issued IDs and take selfies to confirm their identity.
- Collaboration and data sharing: Organizations can share insights about suspected synthetic identities to prevent fraudsters from exploiting gaps between industries.
- Ongoing employee training: Ensure frontline staff can identify suspicious applications and escalate potential fraud cases.
- Regulatory support: Governments and regulators can help by standardizing ID issuance processes and requiring more stringent checks.

Closing thoughts

The tale of the Santa Claus ID may be entertaining, but it underscores the need for vigilance against synthetic ID fraud. As we move into an increasingly digital age, organizations must stay ahead of fraudsters by leveraging technology, training, and collaboration. Because while the idea of Spider-Man or Catwoman walking into your branch may seem amusing, the financial and reputational cost of synthetic ID fraud is no laughing matter.
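The sketch below is one concrete instance of the advice to "cross-check identity attributes across multiple data points." It flags identifiers, such as a Social Security number or phone number, that appear with more than one name and date-of-birth combination across applications. That pattern is consistent with Frankenstein or manipulated synthetics. The record fields and sample values are hypothetical. A real program would draw on far broader data, such as credit bureau and consortium records.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, Iterable, Set, Tuple

@dataclass(frozen=True)
class IdentityRecord:
    ssn: str
    name: str
    dob: str    # ISO 8601 date string
    phone: str

def conflicting_identifiers(records: Iterable[IdentityRecord],
                            key: str = "ssn") -> Dict[str, Set[Tuple[str, str]]]:
    """Map each identifier value seen with 2+ (name, dob) pairs to those pairs."""
    seen: Dict[str, Set[Tuple[str, str]]] = defaultdict(set)
    for record in records:
        # Normalize case and whitespace so trivial variations don't count.
        name = " ".join(record.name.lower().split())
        seen[getattr(record, key)].add((name, record.dob))
    return {value: pairs for value, pairs in seen.items() if len(pairs) > 1}
```

A shared identifier is a prompt for review, not proof of fraud. Households legitimately share phone numbers, for example, which is why a rule like this would feed a broader risk decision rather than decide on its own.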

Despite being a decades-old technology, behavioral analytics is often still misunderstood. We’ve heard from fraud, identity, security, product, and risk professionals that exploring a behavior-based fraud solution brings up big questions, such as: What does behavioral analytics provide that I don’t get now? (Quick answer: a whole new signal and an earlier view of fraud) Why do I need to add even more data to my fraud stack? (Quick answer: it acts with your stack to add insights, not overload) How is this different from biometrics? (Quick answer: while biometrics track characteristics, behavioral analytics tracks distinct actions) These questions make sense — stopping fraud is complex, and, of course, you want to do your research to fully understand what ROI any tool will add. NeuroID, now part of Experian, is one of the only behavioral analytics-first businesses built specifically for stopping fraud. Our internal experts have been crafting behavioral-first solutions to detect everything from simple script fraud bots through to generative AI (genAI) attacks. We know how behavioral analytics works best within your fraud stack, and how to think strategically about using it to stop fraud rings, bot fraud, and other third-party fraud attacks. This primer will provide answers to the biggest questions we hear, so you can make the most informed decisions when exploring how our behavioral analytics solutions could work for you. Q1. What is behavioral analytics and how is it different from behavioral biometrics? A common mistake is to conflate behavioral analytics with behavioral biometrics. But biometrics rely on unique physical characteristics — like fingerprints or facial scans — used for automated recognition, such as unlocking your phone with Face ID. Biometrics connect a person’s data to their identity. But behavioral analytics? They don’t look at an identity. They look at behavior and predict risk. 
While biometrics track who a person is, behavioral analytics track what they do. For example, NeuroID’s behavioral analytics observes every time someone clicks in a box, edits a field, or hovers over a section. So, when a user’s actions suggest fraudulent intent, they can be directed to additional verification steps or fully denied. And if their actions suggest trustworthiness? They can be fast-tracked. Or, as a customer of ours put it: "Using NeuroID decisioning, we can confidently reject bad actors today who we used to take to step-up. We also have enough information on good applicants sooner, so we can fast-track them and say ‘go ahead and get your loan, we don’t need anything else from you.’ And customers really love that." - Mauro Jacome, Head of Data Science for Addi (read the full Addi case study here). The difference might seem subtle, but it’s important. New laws on biometrics have triggered profound implications for banks, businesses, and fraud prevention strategies. The laws introduce potential legal liabilities, increased compliance costs, and are part of a growing public backlash over privacy concerns. Behavioral signals, because they don’t tie behavior to identity, are often easier to introduce and don’t need the same level of regulatory scrutiny. The bottom line is that our behavioral analytics capabilities are unique from any other part of your fraud stack, full-stop. And it's because we don’t identify users, we identify intentions. Simply by tracking users’ behavior on your digital form, behavioral analytics powered by NeuroID tells you if a user is human or a bot; trustworthy or risky. It looks at each click, edit, keystroke, pause, and other tiny interactions to measure every users’ intention. By combining behavior with device and network intelligence, our solutions provide new visibility into fraudsters hiding behind perfect PII and suspicious devices. 
The result is reduced fraud costs, fewer API calls, and top-of-the-funnel fraud capture with no tuning or model integration on day one. With behavioral analytics, our customers can detect fraud attacks in minutes, instead of days. Our solutions have proven results of detecting up to 90% of fraud with 99% accuracy (or <1% false positive rate) with less than 3% of your population getting flagged. Q2. What does behavioral analytics provide that I don’t get now? Behavioral analytics provides a net-new signal that you can’t get from any other tools. One of our customers, Josh Eurom, Manager of Fraud for Aspiration Banking, described it this way: “You can quantify some things very easily: if bad domains are coming through you can identify and stop it. But if you see things look odd, yet you can’t set up controls, that’s where NeuroID behavioral analytics come in and captures the unseen fraud.” (read the full Aspiration story here) Adding yet another new technology with big promises may not feel urgent. But with genAI fueling synthetic identity fraud, next-gen fraud bots, and hyper-efficient fraud ring attacks, time is running out to modernize your stack. In addition, many fraud prevention tools today only focus on what PII is submitted — and PII is notoriously easy to fake. Only behavioral analytics looks at how the data is submitted. Behavioral analytics is a crucial signal for detecting even the most modern fraud techniques. Watch our webinar: The Fraud Bot Future-Shock: How to Spot and Stop Next-Gen Attacks Q3. Why do I need to add even more data to my fraud stack? Balancing fraud, friction, and financial impact has led to increasingly complex fraud stacks that often slow conversions and limit visibility. As fraudsters evolve, gaps grow between how quickly you can keep up with their new technology. 
Fraudsters have no budget constraints, compliance requirements, or approval processes holding them back from deploying new technology against your stack, so they have an inherent advantage. Many fraud teams we hear from are looking for ways to optimize their workflows without adding to the data noise, while balancing everything a fraud stack influences beyond overall security (such as false positives and unnecessary friction).
Behavioral analytics is a great way to work smarter with what you have. The signals add no friction to the onboarding process, are invisible to your customers, and operate pre-submit, using data already captured by your existing application process. Without requiring any new inputs from your users or stepping into messy biometric legal gray areas, behavioral analytics aggregates, sorts, and reviews a broad range of cross-channel, historical, and current customer behaviors to build clear, real-time portraits of transactional risk. Because it sits at the top of the funnel, behavioral analytics doesn’t add to the data noise; it clarifies the data you already rely on by taking pressure off your other tools. With these insights, you can make better fraud decisions, faster.
Or, as Eurom put it: “Before NeuroID, we were not automatically denying applications. They were getting an IDV check and going into a manual review. But with NeuroID at the top of our funnel, we implemented automatic denial based on the risky signal, saving us additional API calls and reviews. And we’re capturing roughly four times more fraud. Having behavioral data to reinforce our decision-making is a relief.”
The behavioral analytics difference
Since the world moved online, we’ve lost the body language cues that used to tell us whether someone was a fraudster. Behavioral analytics provides the digital equivalent: cues such as typing speed, hesitation, and mouse movements highlight riskiness.
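One commonly cited behavioral cue for spotting scripted input is typing rhythm: people type with irregular gaps between keys, while simple automation often produces unnaturally fast or metronome-regular input. The sketch below flags that pattern from keystroke timestamps using the mean and coefficient of variation of inter-key intervals. The function names and both thresholds are illustrative assumptions, not NeuroID’s method, and bots that randomize their timing would need richer signals than this single heuristic.

```python
from statistics import mean, pstdev
from typing import List, Sequence


def inter_key_intervals(key_times_ms: Sequence[int]) -> List[int]:
    """Gaps between consecutive keystrokes, in milliseconds."""
    times = sorted(key_times_ms)
    return [b - a for a, b in zip(times, times[1:])]


def looks_scripted(key_times_ms: Sequence[int],
                   min_mean_ms: float = 30.0,
                   min_cv: float = 0.15) -> bool:
    """Heuristic: flag typing that is implausibly fast or implausibly regular.

    Both thresholds are illustrative assumptions, not tuned values.
    """
    gaps = inter_key_intervals(key_times_ms)
    if len(gaps) < 5:            # too little data to judge either way
        return False
    avg = mean(gaps)
    if avg < min_mean_ms:        # faster than plausible human typing
        return True
    cv = pstdev(gaps) / avg      # variability of the rhythm relative to its speed
    return cv < min_cv           # metronome-like cadence
```

For example, keystrokes exactly 50 ms apart are flagged as too regular, while a human-like sequence with gaps between 80 ms and 220 ms is not.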
The cause of risky behavior could be bots, stolen information, fraud rings, synthetic identities, or any combination of third-party fraud attack strategies. Behavioral analytics gives you the insight to distinguish genuine applicants from potentially fraudulent ones without disrupting your customer’s journey. By interpreting behavioral patterns at the very top of the onboarding funnel, behavioral analytics helps you proactively mitigate fraud, reduce false positives, and streamline onboarding, so you can lock out fraudsters and let in legitimate users. All of this comes from data you already capture, simply by tracking interactions on your site.
Stop fraud, faster: 5 simple uses where behavioral analytics shine
How you approach a behavioral analytics integration will vary based on numerous factors, but here are some of the most immediate, common use cases.
Detecting fraud bots and fraud rings
Behavioral analytics can identify fraud bots by their frameworks, such as Puppeteer or Stealth, and by their behavioral patterns, so you can protect against even the most sophisticated fourth-generation bots. NeuroID provides holistic coverage for bot and fraud ring detection, passively and with no customer friction, often eliminating the need for CAPTCHA and reCAPTCHA. With this data alone, you could potentially blacklist suspected fraud bot and fraud ring attacks at the top of the fraud prevention funnel, avoiding extra API calls.
Sussing out scams and coercion
When users make account changes or transactions under coercion, they often show unfamiliarity with the destination account or shipping address they enter. Our real-time assessment detects these risk indicators, including hesitancy, multiple corrections, and slow typing, and alerts you immediately to take a closer look.
Stopping use of compromised cards and stolen IDs
Traditional PII methods can fall short against today’s sophisticated synthetic identity fraud.
Behavioral analytics uncovers synthetic identities by evaluating how PII is entered instead of relying on the PII itself (which is often compromised). For example, our behavioral signals can assess users’ familiarity with the billing address they enter for a credit card or bank account. Genuine account holders show strong familiarity, while signs of unfamiliarity indicate an account under attack.
Detecting money mules
Our behavioral analytics solutions track how familiar users are with the addresses they enter, conducting a real-time, sub-millisecond familiarity assessment. Risk markers such as hesitancy, multiple corrections, and slow typing speed raise flags for further investigation.
Stopping promotion and discount abuse
Our behavioral analytics identifies risky versus trustworthy users in promo and discount fields. By assessing behavior, device, and network risk, we help you determine whether your promotions attract more risky than trustworthy users, preventing fraudsters from abusing discounts.
Learn more about our behavioral analytics solutions.
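The familiarity assessment described in this article (hesitancy, multiple corrections, slow typing) can be sketched as a set of rule-based flags that route an applicant to fast-track, standard processing or step-up verification. `FieldEntry`, the thresholds and the routing labels are hypothetical; real solutions learn these boundaries from labeled data rather than hard-coding them.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class FieldEntry:
    """Hypothetical summary of how one value (e.g. a billing address) was entered."""
    hesitation_ms: int    # delay between focusing the field and the first keystroke
    corrections: int      # deletions or overwrites while typing
    chars_typed: int
    typing_time_ms: int


# Illustrative thresholds only; a real system would learn these from data.
MAX_HESITATION_MS = 2_000
MAX_CORRECTIONS = 2
MIN_CHARS_PER_SEC = 2.0


def familiarity_flags(e: FieldEntry) -> List[str]:
    """Return the unfamiliarity markers present in one field entry."""
    flags = []
    if e.hesitation_ms > MAX_HESITATION_MS:
        flags.append("hesitancy")
    if e.corrections > MAX_CORRECTIONS:
        flags.append("multiple_corrections")
    seconds = e.typing_time_ms / 1000
    if seconds > 0 and e.chars_typed / seconds < MIN_CHARS_PER_SEC:
        flags.append("slow_typing")
    return flags


def route(e: FieldEntry) -> Tuple[str, List[str]]:
    """Map the number of unfamiliarity flags to a hypothetical next step."""
    flags = familiarity_flags(e)
    if not flags:
        return "fast_track", flags
    if len(flags) == 1:
        return "standard", flags
    return "step_up", flags
```

Routing on several weak signals together, rather than any single one, keeps a lone slow typist from being pushed into step-up verification.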

As online accounts become essential for activities ranging from shopping and social media to banking, "account farming" has emerged as a significant fraud risk. This practice involves creating fake or unauthorized accounts en masse, often for malicious purposes. Understanding how account farming works, why it’s done and how businesses can protect themselves is crucial for maintaining data integrity, safeguarding customer trust and protecting your bottom line.
How does account farming work?
Account farming is the process of creating and cultivating multiple user accounts, often using fake or stolen identities. These accounts may appear to belong to legitimate users, but they’re controlled by a single person or organization, usually with fraudulent intent. Here’s a breakdown of the typical steps involved in account farming:
Identity generation: Account farmers start by obtaining fake or stolen personal information. They may buy these datasets on the dark web or scrape publicly available information to make each account seem legitimate.
Account creation: Using bots or manual processes, fraudsters create numerous accounts on a platform. They often use automated tools to speed up this process, bypassing CAPTCHA or reCAPTCHA systems and using proxy servers to mask their IP addresses and avoid detection.
Warm-up phase: After creation, account farmers often let the accounts sit for a while, engaging in limited, non-suspicious activity to avoid triggering security alerts. This “warming up” process makes the accounts seem more authentic.
Activation for fraudulent activity: Once the accounts reach a level of credibility, they’re activated for their intended purpose, which might include spamming, fraud, phishing, fake reviews or promotional manipulation.
Why is account farming done?
There are several reasons account farming has become a widespread problem across different industries.
Here are some common motivations:
Monetary gain: Fraudsters use farmed accounts to commit fraudulent transactions, like applying for loans and credit products, claiming promotional incentives or exploiting referral programs.
Spam and phishing: Fake accounts enable widespread spam campaigns and phishing attacks, compromising customer data and damaging brand reputation.
Data theft: By creating and controlling multiple accounts, fraudsters may gain access to sensitive data, leading to further exploitation or resale on the dark web.
Manipulating metrics and market perception: Farmed accounts are also used to falsely boost visibility and credibility. For example, on social media, fake accounts can inflate follower counts or engagement metrics. In e-commerce, fraudsters may create fake accounts to leave fake reviews or upvote products, falsely boosting perceived popularity and manipulating purchasing decisions.
How does account farming lead to fraud risks?
Account farming is a serious problem that can expose businesses and their customers to a variety of risks:
Financial loss: Fake accounts created to exploit promotional offers or referral programs can cause significant financial losses. Businesses can also incur costs from chargebacks or fraudulent refunds triggered by these accounts.
Compromised customer experience: Legitimate customers may suffer poor experiences, such as spam messages, unsolicited emails or fraudulent interactions. This erodes brand trust, which is costly to regain.
Data breaches and compliance risks: Account farming often relies on stolen data, increasing the risk of data breaches. Businesses subject to regulations like GDPR or CCPA may face hefty fines if they fail to adequately protect consumer information.
READ MORE: Our Data Breach Industry Forecast predicts what’s in store for the coming year.
How can businesses protect themselves from account farming fraud?
As account farming tactics evolve, businesses need a proactive and sophisticated approach to detecting and preventing these fraudulent activities. Experian’s fraud risk management offerings provide multilayered, customizable protection to help companies safeguard themselves against account farming and other types of fraud. Here’s how we can help:
Identity verification solutions: Experian’s fraud risk and identity verification platform integrates multiple verification methods to confirm the authenticity of user identities. Through real-time data validation, businesses can verify the legitimacy of the information users provide at account creation, detecting and blocking fake identities early in the process. The platform’s flexible architecture allows companies to adapt their identity verification process as new fraud patterns emerge, helping them stay one step ahead of account farmers.
Behavioral analytics: One effective way to identify account farming is to analyze user behavior for patterns consistent with automated or scripted actions (AKA “bots”). Experian’s behavioral analytics solutions, powered by NeuroID, use advanced machine learning algorithms to identify unusual behavioral trends across accounts. By monitoring how users interact with a platform, we can detect patterns common in farmed accounts, like uniform interactions or repetitive actions that don’t align with human behavior.
Device intelligence: To prevent account farming fraud, it’s essential to go beyond user data and examine the devices used to create and access accounts. Experian’s solutions combine device intelligence with identity verification to flag suspicious devices associated with multiple accounts. For example, account farmers often use virtual machines, proxies or emulators to create accounts without revealing their actual location or device details. By identifying and flagging these high-risk devices, we help prevent fraudulent accounts from slipping through the cracks.
Velocity checks: Velocity checks are another way to block fraudulent account creation. By monitoring how often and how quickly new accounts are created from specific IP addresses or devices, Experian’s fraud prevention solutions can identify spikes indicative of account farming. These checks work in real time, enabling businesses to act immediately to block suspicious activity and minimize the risk of fake account creation.
Continuous monitoring and risk scoring: Even after an account is created, continuous monitoring of user activity helps identify accounts that initially bypassed detection but later engage in suspicious behavior. Experian’s risk scoring system assigns each account a fraud risk score based on its behavior over time, alerting businesses to potential threats before they escalate.
Final thoughts: Staying ahead of account farming fraud
Preventing account farming is about more than just blocking bots; it’s about safeguarding your business and its customers against fraud risk. By understanding the mechanics of account farming and using a multilayered approach to fraud detection and identity verification, businesses can protect themselves effectively. Ready to take a proactive stance against account farming and other evolving fraud tactics? Explore our comprehensive solutions today.
This article includes content created by an AI language model and is intended to provide general information.
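A velocity check like the one described in the account farming article can be sketched as a sliding-window counter keyed by IP address or device ID. In this minimal sketch the class name, the limit and the window length are assumptions chosen for illustration, timestamps are assumed to arrive in non-decreasing order per key, and state lives in memory; a production system would typically use a shared store so every server sees the same counts.

```python
from collections import defaultdict, deque
from typing import Deque, Dict


class VelocityCheck:
    """Sliding-window counter of account-creation attempts per key
    (for example an IP address or a device ID)."""

    def __init__(self, max_events: int, window_s: float) -> None:
        self.max_events = max_events   # attempts allowed per window (assumed policy)
        self.window_s = window_s       # window length in seconds
        self._events: Dict[str, Deque[float]] = defaultdict(deque)

    def record(self, key: str, ts: float) -> bool:
        """Record an attempt at time ts (seconds) and return True if the key
        has exceeded max_events within the last window_s seconds."""
        q = self._events[key]
        while q and ts - q[0] >= self.window_s:   # evict attempts outside the window
            q.popleft()
        q.append(ts)
        return len(q) > self.max_events
```

Keying the same counter by device ID rather than IP address covers the "one device, many accounts" pattern described under device intelligence.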

For businesses across all sectors, solutions that improve productivity are more important than ever. As technology advances, organizations across industries are looking to capitalize by investing in artificial intelligence (AI) solutions. Recent studies show that productivity is a leading measure of how well these AI tools are performing: about 60% of organizations surveyed use “improved productivity” as a metric for the success of their AI implementations.[1]
Experian research shows it takes an average of 15 months to build a model and put it into production. This can hinder productivity and slow time to market. Without a deep understanding of key data points, organizations may also struggle to realize time to value efficiently. To improve the modeling lifecycle, businesses must examine the challenges involved in the process.
The challenges of model building
One of the most significant challenges of the modeling lifecycle is speed. Slow modeling processes can cause delays and missed opportunities that businesses might otherwise have capitalized on. Another difficulty is limited access to the high-quality data needed to build more efficient models. Without the right data, businesses can miss out on actionable insights that could give them a competitive edge. In addition, when organizations lack efficient resources, expenses can skyrocket due to the need for experts to intervene and address ongoing issues. The steep learning curve that comes with adopting new tools and platforms can also make it difficult for organizations to operate efficiently without outside help. Businesses can combat these challenges by implementing tools such as AI to drive efficiency and productivity.
The AI journey
While generative AI and large language models are becoming more prevalent in everyday life, incorporating a fully functional AI tool into an organization’s business operations involves multiple steps. Many organizations begin their AI journey with a proof of concept: developing ideas and use cases, experimenting, and identifying and mitigating potential pitfalls, such as inaccurate or irrelevant information. Once a proof of concept reaches an acceptable level of validity, organizations can move on to production and value at scale. During this phase, organizations select specific use cases to move into production and measure their performance. Analyzing the results helps businesses learn which techniques work most effectively, so they can apply those techniques to new use cases. After successful iterations, the organization can embed the AI into everyday operations, helping drive productivity at scale across various business processes.
Experian’s AI journey has been ongoing, with years of expertise in implementing AI in various products and services. With the goal of providing greater insights to both businesses and consumers while adhering to consumer data privacy and compliance requirements, Experian is committed to using AI responsibly to combat fraud and foster greater financial access and inclusion. Our most recent AI innovation, Experian Assistant, is redefining how financial organizations improve productivity with data-driven insights.
Introducing Experian Assistant
Experian Assistant, a new GenAI tool announced in October at Money20/20 in Las Vegas, is helping organizations take their productivity to the next level by drastically speeding up the modeling lifecycle.
To drive automation and greater intelligence for Experian partners, Experian Assistant lets users interact with a virtual assistant in real time and offers customized guidance and code generation for our suite of software solutions. Our experts, Senior Director of Product Management Ankit Sinha and Director of Analyst Relations Erin Haselkorn, recently detailed how Experian Assistant can cut model-development timelines from months to days, and in some cases even hours. The webinar, held on November 7, covered a wide range of the new tool’s features and benefits, including:
Spending less time writing code
Enhancing understanding of data and attributes
Accelerating time to value
Improving regulatory compliance
A case study in building models faster
Continental Finance Company, LLC’s Chief Data Scientist shared their experience using Experian Assistant and how it has improved their organization’s modeling capabilities: “With Experian Assistant, there is a lot of efficiency and improvement in productivity. We have reduced the time spent on data building by almost 75%, so we can build a model much quicker, and the code being generated by Experian Assistant is very high quality, enabling us to move forward much faster.”
For businesses looking to accelerate their modeling lifecycle and move more quickly with less effort, Experian Assistant provides a unique opportunity to significantly improve productivity and efficiency.
Experian Assistant tech showcase
Did you miss the Experian Assistant Tech Showcase webinar? Watch it on demand and visit our website to learn more.
[1] Forrester’s Q2 AI Pulse Survey, 2024

How can lenders ensure they’re making the most accurate and fair lending decisions? The answer lies in consistent model validations.
What are model validations?
Model validations are vital for effective lending and risk-based pricing programs. In addition to helping you determine which credit scoring model works best on your portfolio, validations produce performance (odds) charts that are often used to set score cutoffs and risk-based pricing tiers. Validations also provide the information you need to implement a new score in your decisioning process.
Factors affecting model validations
Understanding how well a score predicts behavior, such as payment delinquency or bankruptcy, enables you to make more confident lending decisions. Model performance and validation results can be affected by several factors, including:
Dynamic economic environment: Shifts in unemployment rates, interest rate hikes and other economic indicators can affect consumer behavior.
Regulatory changes affecting consumers: For example, borrowers who benefited from a temporary student loan payment pause may face challenges as they resume payments.
Scorecard degradation: A model that performed well several years ago may not perform as well under current conditions.
When to perform model validations
The Office of the Comptroller of the Currency’s Supervisory Guidance on Model Risk Management states that model validations should be performed at least annually to help reduce risk. The validation process should be comprehensive and produce proper documentation. While some organizations perform their own validations, those with fewer resources or limited access to historical data may not be able to validate in line with the guidance. Regular validations support compliance and give you confidence that your lending strategies are built on solid, current data that drives better outcomes.
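To illustrate what a performance (odds) chart contains, the sketch below groups accounts into score bands and computes the good:bad odds in each band from observed outcomes. The function name, the band edges and the definition of a "bad" outcome (for example, 90+ days past due) are assumptions for illustration; a real validation would follow your institution’s sampling, performance-window and documentation standards.

```python
from bisect import bisect_right
from typing import List, Sequence, Tuple

Row = Tuple[str, int, int, int, float]


def odds_chart(scores: Sequence[int], outcomes: Sequence[int],
               band_edges: Sequence[int]) -> List[Row]:
    """Build a simple performance (odds) chart.

    scores     -- score for each account in the validation sample
    outcomes   -- 1 if the account went "bad" under your chosen definition, else 0
    band_edges -- ascending lower bounds of the score bands
    Returns one row per band: (label, goods, bads, total, good:bad odds).
    """
    goods = [0] * len(band_edges)
    bads = [0] * len(band_edges)
    for score, bad in zip(scores, outcomes):
        i = bisect_right(band_edges, score) - 1   # index of the band containing score
        if i < 0:
            continue                              # below the lowest band edge
        if bad:
            bads[i] += 1
        else:
            goods[i] += 1
    rows: List[Row] = []
    for i, lo in enumerate(band_edges):
        label = f"{lo}-{band_edges[i + 1] - 1}" if i + 1 < len(band_edges) else f"{lo}+"
        g, b = goods[i], bads[i]
        odds = g / b if b else float("inf")       # no bads observed in this band
        rows.append((label, g, b, g + b, odds))
    return rows
```

For a model that still ranks risk well, the odds should rise steadily from the lowest band to the highest; a flat or inverted pattern is one sign of the scorecard degradation described above.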
Good model validation practices are critical if lenders are to continue making data-driven decisions that promote fairness for consumers and financial soundness for the institution.
Make better lending decisions
If you’re a credit risk manager responsible for the models driving your lending policies, there are several things you can do to ensure that your organization continues to make fair and sound lending decisions:
Assess your model inventory. Ensure you have comprehensive documentation showing when each model was developed and when it was last validated.
Validate the scores you are using on your data, along with those you are considering, to compare how well each model performs and determine whether you are using the most effective model for your needs.
Produce validation documentation, including performance (odds) charts and key performance metrics, that can be shared with regulators.
Use the performance charts produced from the validation to analyze bad rates and approval rates, and adjust cutoff scores as needed.
Explore alternative credit scoring models to potentially enhance your scoring process.
As market conditions and regulations continue to evolve, model validations will remain an essential tool for staying competitive and making sound lending decisions.
Ready to ensure your lending decisions are based on the latest data? Learn more about Experian’s flexible validation services and how we can support your ongoing success. Contact us today to schedule a consultation.
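Using performance charts to "analyze bad rates/approval rates and adjust cutoff scores" comes down to a trade-off between how many applicants you approve and how many of those approvals go bad. This minimal sketch computes both for a list of candidate cutoffs, assuming every applicant at or above the cutoff is approved; the function name and the toy sample are hypothetical.

```python
from typing import List, Sequence, Tuple


def cutoff_table(scores: Sequence[int], outcomes: Sequence[int],
                 cutoffs: Sequence[int]) -> List[Tuple[int, float, float]]:
    """For each candidate cutoff, approve every score >= cutoff and report
    (cutoff, approval rate, bad rate among approved accounts)."""
    n = len(scores)
    rows = []
    for cutoff in cutoffs:
        approved = [bad for score, bad in zip(scores, outcomes) if score >= cutoff]
        approval_rate = len(approved) / n if n else 0.0
        bad_rate = sum(approved) / len(approved) if approved else 0.0
        rows.append((cutoff, approval_rate, bad_rate))
    return rows


# Toy validation sample: scores and observed outcomes (1 = went bad).
scores = [550, 580, 590, 650, 660, 680, 690, 720, 750, 780]
bad = [1, 1, 0, 1, 0, 0, 0, 0, 0, 0]
for cutoff, approval, bad_rate in cutoff_table(scores, bad, [500, 600, 700]):
    print(f"cutoff {cutoff}: approve {approval:.0%}, bad rate {bad_rate:.1%}")
```

In this toy sample, raising the cutoff from 600 to 700 drops approvals from 70% to 30% while the bad rate among approved accounts falls from about 14% to zero, which is the trade-off a validated performance chart helps you price.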

In today's data-driven business landscape, leveraging advanced targeting techniques is crucial for effective consumer engagement, particularly in the financial services sector. Prescreen targeting solutions have evolved significantly, offering a competitive edge through more precise and impactful outreach strategies.
The power of data analytics and predictive modeling
At the heart of modern prescreen targeting solutions lies the integration of extensive data analytics and predictive modeling. These systems combine detailed consumer information, including purchasing behaviors and credit scores, with sophisticated algorithms to identify the potential customers most likely to respond positively to specific promotional campaigns. This approach not only streamlines campaign efforts but also makes each interaction more effective.
Direct mail: a proven channel for financial services
In the competitive North American financial services market, direct mail has demonstrated distinct advantages as a targeting channel. Its tangible nature helps cut through digital noise, capturing consumer attention effectively. For credit products, direct mail typically achieves engagement rates of 0.2-2% for prime consumers and 1-3% for near-prime and subprime consumers.[1]
Key advantages of prescreen targeting solutions
Enhanced response rates
Custom response models can significantly boost prospect response rates by targeting a well-defined, high-propensity audience. These models have the potential to improve the average response rate of prescreen direct mail campaigns by 10-25%.
Risk mitigation
By focusing on well-defined, high-propensity audiences, prescreen targeting via direct mail aims to attract the right prospects, minimizing fraud and delinquency risks. This targeted approach can lead to substantial savings on underwriting costs.
Improved customer engagement and retention
Personalized direct mail strengthens customer relationships by making recipients feel valued, leading to higher engagement and loyalty, both crucial for long-term business success.
Regulatory compliance and security
Prescreen solutions come equipped with compliance safeguards, simplifying adherence to industry regulations and consumer privacy standards. This is particularly critical in the highly regulated financial sector.
The future of targeting and enhancement
As markets continue to evolve, the strategic importance of precise and efficient marketing techniques will only grow. Financial institutions that use optimized prescreen targeting and enhancement solutions can gain a significant competitive advantage, achieving higher immediate returns while fostering long-term customer loyalty and brand strength. Future advances in AI and machine learning are expected to further refine prescreen targeting, giving marketers even more sophisticated tools to engage their target audiences.
Ascend Intelligence Services™ Target
Ascend Intelligence Services Target is a sophisticated prescreening solution that boosts direct mail response rates. It uses comprehensive trended and alternative data, capturing credit and behavior patterns, to iterate through direct mail response models and mathematical optimization. This enhances the targeting strategy and maximizes campaign response, take-up rates, and ROI within business constraints.
[1] Experian Research, Data Science Team, July 2024
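To make the 10-25% response-rate improvement quoted for prescreen campaigns concrete, the sketch below reads it as a relative lift on the baseline response rate rather than an addition of 10-25 percentage points (an assumption based on the wording). The mail volume, baseline rate and cost per piece are hypothetical values for illustration, not Experian figures.

```python
from dataclasses import dataclass


@dataclass
class CampaignEstimate:
    responders: float
    cost_per_response: float


def estimate_campaign(pieces: int, base_rate: float, relative_lift: float,
                      cost_per_piece: float) -> CampaignEstimate:
    """Expected responders and cost per response for one direct mail drop.

    relative_lift is applied multiplicatively: 0.10 means the response rate
    rises from base_rate to base_rate * 1.10 (an assumed interpretation).
    """
    responders = pieces * base_rate * (1 + relative_lift)
    total_cost = pieces * cost_per_piece
    cost_per_response = total_cost / responders if responders else float("inf")
    return CampaignEstimate(responders, cost_per_response)


# Hypothetical campaign: 100,000 pieces, 1% baseline response, $0.50 per piece.
for lift in (0.0, 0.10, 0.25):
    e = estimate_campaign(100_000, 0.01, lift, 0.50)
    print(f"lift {lift:.0%}: {e.responders:,.0f} responders, "
          f"${e.cost_per_response:.2f} per response")
```

Under these assumptions, a 10% lift turns about 1,000 responders into 1,100 and a 25% lift into 1,250, so the cost per response falls from $50 to roughly $45 and $40 for the same mail spend.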

In 2023, mobile fraud attacks surged by over 50%.[1] With people relying more on mobile devices for day-to-day activities like banking, shopping and healthcare, fraudsters have found new ways to exploit mobile security. With phones housing such sensitive data, how can businesses ensure that the person on the other end of a mobile device is who they claim to be? Enter mobile identity verification, a process designed to protect consumers and businesses in today’s mobile-driven world.
Understanding mobile identity
Mobile identity refers to the digital identity associated with a mobile device. This includes information like phone numbers, SIM cards, device IDs and user credentials that uniquely identify a person or device. Verifying that the mobile identity belongs to the correct individual is crucial for secure digital transactions.
What is mobile identity verification?
Mobile identity verification confirms the legitimacy of users accessing services via their mobile device. This process uses personal data, biometrics and mobile network information to authenticate identity, ensuring businesses interact with real customers without unnecessary friction.
Why is mobile identity verification important?
The rise of mobile banking, mobile payments and other mobile-based services has increased the need for robust security measures. Cybercriminals have found ways to exploit the mobile ecosystem through SIM swapping, phishing and other fraud tactics. This makes mobile identity verification critical for businesses looking to protect sensitive customer data and prevent unauthorized access. Here are some of the key reasons why mobile identity verification is essential:
Preventing fraud: Identity theft and fraud are major concerns for businesses and consumers alike. Mobile identity verification helps reduce the risk of fraud by ensuring that the user is who they say they are.
Enhancing user trust: Customers are more likely to trust a service that prioritizes their security.
Businesses that implement mobile identity verification solutions provide an extra layer of protection, which can help build customer confidence.
Regulatory compliance: Many industries, including finance and healthcare, are subject to strict regulations concerning data privacy and security. Mobile identity verification helps businesses meet these regulatory requirements by offering a secure way to verify customer identities.
Improving user experience: While security is essential, businesses must also ensure that they do not create a cumbersome user experience. Mobile identity verification solutions offer a quick and seamless way for users to verify their identities without sacrificing security. This is especially important for onboarding new users or completing transactions quickly.
How does mobile identity verification work?
Mobile identity verification involves a combination of techniques and technologies, depending on the service provider and the level of security required. Some common methods include:
Biometric authentication: Biometrics like fingerprint scans, facial recognition and voice recognition are becoming increasingly popular for verifying identities. These methods are secure and convenient for users since they don't require remembering passwords or PINs.
SMS-based verification: One-time passwords (OTPs) sent via SMS to a user's mobile phone are still widely used. This method ties the verification process directly to the user's mobile device, confirming that they possess their registered phone number.
Device-based verification: By analyzing the unique identifiers of a mobile device, such as IMEI numbers, businesses can confirm that the device is registered to the user attempting to access services. This helps prevent fraud attempts from unregistered or stolen devices.
Mobile network data: Mobile network operators have access to valuable information, such as the user’s location, SIM card status and network activity.
By leveraging this data, businesses can further verify that the user is legitimate and is using their mobile network as expected.
Behavioral analytics: By analyzing patterns in user behavior (such as typing speed, navigation habits and interactions with apps), mobile identity verification solutions can detect anomalies that might indicate fraudulent activity. For instance, if a user’s behavior shows little or no familiarity with the PII they provide, it can trigger an additional layer of verification.
The role of identity solutions in mobile identity verification
Mobile identity verification is just one part of a broader range of identity solutions that help businesses authenticate users and protect sensitive data. These solutions cover not only mobile devices but other digital touchpoints as well, giving organizations a holistic, multilayered approach to identity verification across all channels. Companies that provide comprehensive identity verification solutions can help organizations build robust security infrastructures while offering seamless customer experiences. For instance, Experian offers cutting-edge solutions designed to meet the growing demand for secure and efficient identity verification and authentication. These solutions can significantly reduce fraud and improve customer satisfaction.
The growing importance of digital identity
In the digital age, managing and verifying identities extends beyond traditional physical credentials like driver’s licenses or Social Security numbers. Digital identity plays an essential role in enabling secure online transactions, personalizing user experiences and protecting individuals' privacy. However, with great convenience comes great responsibility. Businesses need to strike a balance between security and personalization, protecting user data while still offering a smooth customer experience.
As mobile identity verification becomes more widespread, it’s clear that safeguarding digital identity is more important than ever. To learn more about the importance of digital identity and how businesses can find the right balance between security and personalization, check out this article: Digital identity: finding the balance between personalization and security.
How Experian can help
Experian is at the forefront of providing innovative identity verification solutions that empower businesses to protect their customers and prevent fraud. With solutions tailored for mobile identity verification, businesses can seamlessly authenticate users while minimizing friction. Experian’s technology integrates behavioral analytics, device intelligence and mobile network data to create a comprehensive and secure identity verification process. Whether you’re looking for a complete identity verification solution or need specialized mobile identity verification services, Experian’s identity verification and authentication solutions offer the tools and expertise your organization needs to stay secure in an evolving digital landscape.
[1] Kaspersky
This article includes content created by an AI language model and is intended to provide general information.
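The SMS-based one-time password method described in the mobile identity article can be sketched as follows: generate a random six-digit code, store it with an expiry time and an attempt counter, and accept it only once. The class name, the five-minute lifetime and the three-attempt limit are assumptions; SMS delivery, rate limiting of code requests and persistent storage are deliberately left out of this in-memory sketch.

```python
import hmac
import secrets
import time
from typing import Dict, Optional, Tuple

OTP_TTL_S = 300    # assumed code lifetime: 5 minutes
MAX_ATTEMPTS = 3   # assumed number of wrong guesses allowed


class OtpStore:
    """In-memory sketch of SMS one-time passcode issuance and verification."""

    def __init__(self) -> None:
        # phone number -> (code, expiry timestamp, failed attempts)
        self._codes: Dict[str, Tuple[str, float, int]] = {}

    def issue(self, phone: str, now: Optional[float] = None) -> str:
        """Create a fresh 6-digit code for this phone number, replacing any old one."""
        now = time.time() if now is None else now
        code = f"{secrets.randbelow(10**6):06d}"   # cryptographically secure randomness
        self._codes[phone] = (code, now + OTP_TTL_S, 0)
        return code  # hand this to your SMS gateway; never return it to the client

    def verify(self, phone: str, submitted: str, now: Optional[float] = None) -> bool:
        """Return True once for a correct, unexpired code; codes are single-use."""
        now = time.time() if now is None else now
        entry = self._codes.get(phone)
        if entry is None:
            return False
        code, expires_at, attempts = entry
        if now > expires_at or attempts >= MAX_ATTEMPTS:
            del self._codes[phone]                  # expired or locked out
            return False
        if hmac.compare_digest(code.encode(), submitted.encode()):  # constant-time compare
            del self._codes[phone]                  # single use
            return True
        self._codes[phone] = (code, expires_at, attempts + 1)
        return False
```

The constant-time comparison and the attempt limit matter because a six-digit code has only a million possible values; without them, a bot could guess codes at scale.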

U.S. federal prosecutors have indicted Michael Smith of North Carolina for allegedly orchestrating a $10 million fraud scheme involving AI-generated music. Smith is accused of creating fake bands and using AI tools to produce hundreds of tracks, which were streamed by fake listeners on platforms like Spotify, Apple Music, and Amazon Music. Despite the artificial engagement, the scheme generated real royalty payments, defrauding these streaming services. This case marks the first prosecution of its kind and highlights a growing financial risk: the potential for rapid, large-scale fraud on digital platforms when content and engagement can be easily fabricated.
A new report from Imperva Inc. estimates that unsecured APIs and bot attacks cost businesses up to $186 billion annually. Its findings point to an especially heavy economic burden on large companies, whose API ecosystems are complex, extensive and often unsecured. Last year, enterprises managed about 613 API endpoints on average, a number expected to grow, increasing the associated risks.
API exposure to bot attacks
Bot attacks like those seen in streaming fraud are also plaguing financial institutions, posing significant risks to both security and financial stability.
1. Fraudulent transactions and account takeover
Automated fraudulent transactions: Bots can perform high volumes of small, fraudulent transactions across multiple accounts, causing financial loss and overwhelming fraud detection systems.
Account takeover: Bots can attempt credential stuffing, using compromised login data to access user accounts. Once inside, attackers can steal funds or sensitive information, causing significant financial and reputational damage.
2. Synthetic identity fraud
Creating fake accounts: Bots can be used to generate large numbers of synthetic identities, which are then used to open fake accounts for money laundering, credit fraud, or other illicit activities.
Loan or credit card fraud: Using fake identities, bots can apply for loans or credit cards and draw down the funds with no intent to repay, resulting in significant losses for financial institutions.
3. Exploiting API vulnerabilities
API abuse: Just as bots exploit API endpoints in streaming services, they can also target vulnerable APIs in financial platforms to extract sensitive data or initiate unauthorized transactions, leading to significant data breaches.
Data exfiltration: Bots can use APIs to extract financial data, customer details, and transaction records, which may then be used for identity theft or sold on the dark web.
Bot attacks targeting financial institutions can result in extensive fraud, data breaches, regulatory fines, and loss of customer trust, with significant financial and operational consequences.
Safeguarding financial integrity
To safeguard your business from these attacks, particularly through unsecured APIs, a multi-layered defense strategy is essential. Here’s how you can protect your business and ensure its financial integrity:
1. Monitor and analyze data patterns
Real-time analytics: Implement monitoring systems that track user behavior continuously. By analyzing user patterns, you can detect irregular spikes in activity that may indicate bot-driven attacks. These anomalies should trigger alerts for immediate investigation.
AI, machine learning, and geo-analysis: Use AI and machine learning models to spot unusual behaviors that can signal fraudulent activity. Geo-analysis tools help identify traffic originating from regions known for bot farms, allowing you to take preventive action before damage occurs.
2. Strengthen API access controls
Limit access with token-based authentication: Require a valid, verifiable token on every API request so that only verified applications and users can reach your APIs. This reduces the chances of unauthorized or bot-driven API abuse.
Control third-party integrations: Restrict API access to trusted, vetted third-party services, and review each external service thoroughly to prevent malicious actors from exploiting your platform.
3. Implement robust account creation procedures
PII identity verification solutions: Verify applicants’ personally identifiable information (PII) to authenticate their identities, which helps protect sensitive data and prevent fraud and identity theft.
Email and phone verification: Requiring email or phone verification during account creation can reduce mass fake-account generation, a common bot tactic for fraudulent activity.
Combating Bots as a Service: By focusing on intent-based deep behavioral analysis (IDBA), you can spot even sophisticated bots without adding friction.
4. Establish strong anti-fraud alliances
Collaborate with industry networks: Join industry alliances or working groups that focus on API security and fraud prevention. Staying informed about emerging threats and sharing best practices with peers will help you anticipate new attack strategies.
5. Continuously monitor customers and accounts
Behavior analysis for repeat offenders: Monitor for repeat fraudulent behavior from the same accounts or users. If certain users or transactions show consistent signs of manipulation, flag them for detailed investigation and potential restrictions.
User feedback loops: Encourage users to report suspicious activity. This crowd-sourced intelligence can be invaluable in identifying bot activity quickly and limiting the damage.
6. Maintain transparency and accountability
Audit and report regularly: Publish regular, transparent reports on API usage and your anti-fraud measures. This builds trust with stakeholders and customers, who can see your proactive steps toward securing the platform.
Real-time dashboards: Provide users with real-time visibility into their data streams or account activities, so that unexplained spikes or dips can be flagged and investigated immediately, providing greater transparency and control.
Conclusion
Safeguarding your business from bot attacks and API abuse requires a comprehensive, multi-layered approach. By investing in advanced monitoring tools, enforcing strict API access controls, and collaborating with anti-fraud networks, your organization can mitigate the risks posed by bots while maintaining credibility and trust. The right strategy will not only protect your business but also preserve the integrity of your platform.
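The credential-stuffing threat described above, where bots replay compromised logins against user accounts, tends to leave a recognizable trace: failed logins from one source spread across many different accounts. The Python sketch below illustrates that heuristic with a sliding time window. The class name, the window length, and the threshold are hypothetical choices for illustration, not part of any Experian or Imperva product. A real system would combine this signal with device, network, and behavioral data rather than rely on it alone.

```python
from __future__ import annotations

from collections import defaultdict, deque


class CredentialStuffingMonitor:
    """Flags a source whose failed logins span many different accounts.

    Hypothetical sketch: a genuine user who mistypes a password fails
    repeatedly on one account, while credential stuffing fails across many.
    """

    def __init__(self, window_seconds: float = 300.0, max_distinct_accounts: int = 10):
        self.window_seconds = window_seconds
        self.max_distinct_accounts = max_distinct_accounts
        # source (e.g. an IP address) -> (timestamp, username) of recent failures, oldest first
        self._failures: dict[str, deque[tuple[float, str]]] = defaultdict(deque)

    def record_failed_login(self, source: str, username: str, timestamp: float) -> bool:
        """Record one failed login; return True if the source now looks like credential stuffing."""
        events = self._failures[source]
        events.append((timestamp, username))
        # Drop failures that have fallen out of the sliding window.
        while events and events[0][0] <= timestamp - self.window_seconds:
            events.popleft()
        distinct_accounts = {user for _, user in events}
        return len(distinct_accounts) > self.max_distinct_accounts


# Usage: four different accounts fail from one documentation-range IP within a minute.
monitor = CredentialStuffingMonitor(window_seconds=60, max_distinct_accounts=3)
for i in range(4):
    flagged = monitor.record_failed_login("203.0.113.7", f"user{i}", timestamp=float(i))
print(flagged)  # True
```

The design counts distinct usernames rather than raw failures. This keeps a consumer who repeatedly mistypes their own password from being flagged as a bot.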
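The "Real-time analytics" step above calls for detecting irregular spikes in activity that may indicate bot-driven attacks. A simple way to illustrate this is to compare each interval's event count with the average of recent normal intervals. The event count could be, for example, logins or API calls per minute. The Python sketch below does this under stated assumptions. The class TrafficSpikeDetector, its multiplier, and its minimum-volume floor are hypothetical. Production systems typically use the AI and machine learning models the article mentions rather than a fixed rule.

```python
from __future__ import annotations

from collections import deque


class TrafficSpikeDetector:
    """Flags an interval whose event count is far above the recent baseline."""

    def __init__(self, baseline_intervals: int = 12, multiplier: float = 3.0, min_count: int = 50):
        self.multiplier = multiplier
        self.min_count = min_count  # very low volumes are never treated as spikes
        # Counts from the most recent non-spike intervals (e.g. one count per minute).
        self._baseline: deque[int] = deque(maxlen=baseline_intervals)

    def observe(self, count: int) -> bool:
        """Add the event count for a completed interval; return True if it is a spike."""
        if self._baseline:
            average = sum(self._baseline) / len(self._baseline)
            if count >= self.min_count and count > self.multiplier * average:
                return True  # spikes are kept out of the baseline
        self._baseline.append(count)
        return False
```

Flagged intervals are deliberately kept out of the baseline, so a sustained bot attack keeps raising alerts instead of quietly becoming the new normal. The trade-off is that genuine, lasting growth in traffic would require the baseline to be reset after review.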
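Token-based authentication and third-party allow-listing, described under "Strengthen API access controls," can be sketched with Python's standard library alone. In this sketch, the server signs a short-lived token with HMAC-SHA256. On each request, it checks the signature, the expiry, and whether the client is on an approved list. This is a simplified, hypothetical illustration: the token format, SIGNING_KEY, and both function names are assumptions. Production APIs usually rely on established standards such as OAuth 2.0 access tokens or JWTs handled by a well-vetted library.

```python
from __future__ import annotations

import base64
import hashlib
import hmac
import json
import time

# Assumption: a server-side signing key. In practice, load it from a secrets
# manager and rotate it; never hard-code it.
SIGNING_KEY = b"example-only-signing-key"


def _b64encode(data: bytes) -> str:
    return base64.urlsafe_b64encode(data).decode("ascii").rstrip("=")


def _b64decode(text: str) -> bytes:
    # Restore the padding stripped by _b64encode.
    return base64.urlsafe_b64decode(text + "=" * (-len(text) % 4))


def issue_token(client_id: str, ttl_seconds: int = 300, now: float | None = None) -> str:
    """Issue a short-lived token of the form <payload>.<HMAC-SHA256 signature>."""
    now = time.time() if now is None else now
    payload = json.dumps({"sub": client_id, "exp": int(now) + ttl_seconds}).encode()
    signature = hmac.new(SIGNING_KEY, payload, hashlib.sha256).digest()
    return f"{_b64encode(payload)}.{_b64encode(signature)}"


def verify_token(token: str, allowed_clients: set[str], now: float | None = None) -> bool:
    """Accept only unexpired, untampered tokens issued to an allow-listed client."""
    now = time.time() if now is None else now
    try:
        payload_b64, signature_b64 = token.split(".")
        payload = _b64decode(payload_b64)
        signature = _b64decode(signature_b64)
    except ValueError:  # wrong shape or invalid base64 (binascii.Error is a ValueError)
        return False
    expected = hmac.new(SIGNING_KEY, payload, hashlib.sha256).digest()
    # Constant-time comparison so timing does not reveal how much of the signature matched.
    if not hmac.compare_digest(signature, expected):
        return False
    claims = json.loads(payload)
    return claims["sub"] in allowed_clients and claims["exp"] > now
```

Checking the allow-list inside verify_token ties token validation to the "Control third-party integrations" advice. A token that is validly signed but belongs to a client no longer on the approved list is still rejected.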

In today’s digital age, call center fraud is a growing threat that businesses can no longer afford to ignore. As fraudsters become increasingly sophisticated, companies must implement robust security measures to protect both their operations and their consumers. Call center fraud takes various forms, and each can have a significant impact on a business. To prevent it, companies can use effective strategies, including multifactor authentication solutions and account takeover prevention techniques. But first, what is call center fraud?
Understanding call center fraud
Call center fraud occurs when fraudsters exploit vulnerabilities in customer service operations to gain unauthorized access to sensitive information and commit identity theft. It can take many forms, including social engineering, in which a fraudster manipulates a call center agent into providing information or access, and phishing, in which fraudsters use deceptive tactics to obtain confidential details from unsuspecting individuals.
One of the most concerning tactics is impersonation: fraudsters pretend to be legitimate consumers to gain access to their accounts. Once inside, they can make unauthorized transactions, change account details, or even take over the account entirely, a scenario known as account takeover. The impact can be devastating, leading to significant financial losses, damage to brand reputation, and a loss of consumer trust.
Key strategies for preventing call center fraud
According to recent research, account takeover fraud has increased by 330% in the past two years and is projected to cost $6.24 billion globally.[1] In addition, the share of U.S. consumers who have experienced account takeover increased from 22% in 2021 to 29% in 2023.[2]
To effectively combat call center fraud, businesses must adopt a multi-layered approach that includes advanced technological solutions, comprehensive employee training, and real-time monitoring. Here are some of the most effective strategies:
1. Implementing multifactor authentication (MFA) solutions
One of the most effective ways to secure consumer interactions is to implement multifactor authentication (MFA). MFA requires users to provide two or more verification factors before they can access an account or complete a transaction. These factors can include something they know, something they have, or something they are. This extra layer of security makes it significantly harder for fraudsters to succeed, even if they have obtained some of the consumer’s information.
MFA can be integrated into call center operations in several ways. For example, businesses can use voice recognition as a biometric factor, requiring consumers to verify their identity through a unique voiceprint. Other methods include sending a one-time code via text message, which the consumer must provide during the call, or mobile app verification, in which consumers approve transactions directly on their smartphones.
2. Account takeover prevention
Account takeover is one of the most serious threats to call centers because it lets fraudsters gain control of a consumer’s account, often with disastrous consequences. To prevent it, businesses can employ a combination of technological solutions and best practices.
First, it helps to understand what account takeover entails. It typically begins when a fraudster obtains some of the consumer’s personal information, often through phishing, social engineering, or a data breach. The fraudster then uses this information to impersonate the consumer and convince call center agents to grant access to the account. To combat this, businesses can employ several account takeover prevention techniques.
Anomaly detection systems can flag unusual activity, such as login attempts from unfamiliar locations or devices, and prompt additional verification steps. Behavioral biometrics is another powerful tool: it analyzes patterns in how users interact with their devices to detect inconsistencies that may indicate fraud. Continuous authentication, in which the system verifies the user’s identity throughout the session rather than only at the start, is also effective at catching fraudsters in the act.
3. Training and awareness
Technology alone may not be enough to prevent call center fraud entirely; human factors are equally important. Regular training for call center staff is essential so that team members can recognize and respond to potential fraud attempts. Employees should be trained to identify common fraud tactics, such as social engineering, and to follow strict verification procedures before providing any sensitive information.
Awareness campaigns can also play a significant role in preventing fraud. Internally, companies should run regular campaigns to remind employees of the importance of adhering to security protocols. Externally, educating consumers about the risks of fraud and encouraging them to use security features like MFA can help reduce the likelihood of successful attacks.
4. Real-time monitoring and analytics
Real-time monitoring is a critical component of an effective fraud prevention strategy. By continuously monitoring calls and transactions, businesses can quickly identify and respond to suspicious activity before it escalates. Advanced analytics tools, including voice analytics and behavior analysis, can provide valuable insights into potential fraud, allowing companies to take proactive measures. Voice analytics, for instance, can detect stress or hesitation in a caller’s voice, which may indicate that the caller is not who they claim to be.
Behavior analysis can track how consumers typically interact with their accounts and flag deviations from the norm as potential fraud. Continuous improvement is key here: regularly reviewing and updating monitoring protocols helps businesses stay ahead of evolving threats.
Preventing call center fraud in your business
By using a multi-layered fraud prevention approach with a variety of authentication solutions, your business can quickly detect call center fraud without disrupting your consumers’ experience.
Identify the risk
Identity-based risk detection can pinpoint when a specific identity may be in the hands of fraudsters.
Device intelligence solutions can recognize the risk associated with a specific device used to attempt online access.
Address the risk
Knowledge-based authentication (KBA) can quickly authenticate users by asking questions that only the genuine consumer should be able to answer, which can deter fraudsters.
MFA services can generate and deliver a one-time password to a consumer’s mobile device to verify their identity in real time.
Document verification allows your business to collect and verify images of identity documents uploaded from a consumer’s mobile device.
Protect your business and your consumers from call center fraud
Call center fraud is a significant threat that requires a proactive and comprehensive approach to prevention. By implementing strategies such as multifactor authentication, account takeover prevention techniques, and robust employee training, businesses can significantly reduce their risk of falling victim to fraud. In today’s fast-paced digital world, staying vigilant and proactive is key to safeguarding your call center against fraud. Act now to protect your business and maintain the trust of your consumers.
Enable your call center to detect risk quickly and effectively with our robust fraud prevention solutions.
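The one-time code described under "Implementing multifactor authentication (MFA) solutions" is sent by text message and provided during the call. It relies on a few server-side safeguards: the code should be unpredictable, short-lived, single-use, and protected against repeated guessing. The Python sketch below shows those safeguards under illustrative assumptions. OneTimeCodeService and its defaults (6 digits, a 5-minute lifetime, 3 attempts) are hypothetical. Delivering the code to the consumer's phone is out of scope here.

```python
from __future__ import annotations

import hmac
import secrets
from dataclasses import dataclass


@dataclass
class _PendingCode:
    code: str
    expires_at: float
    attempts_left: int


class OneTimeCodeService:
    """Issues single-use numeric codes and checks them with an expiry and an attempt limit."""

    def __init__(self, ttl_seconds: float = 300.0, max_attempts: int = 3, digits: int = 6):
        self.ttl_seconds = ttl_seconds
        self.max_attempts = max_attempts
        self.digits = digits
        self._pending: dict[str, _PendingCode] = {}

    def issue(self, account_id: str, now: float) -> str:
        # secrets (not random) gives a cryptographically strong code, zero-padded to `digits`.
        code = f"{secrets.randbelow(10 ** self.digits):0{self.digits}d}"
        self._pending[account_id] = _PendingCode(code, now + self.ttl_seconds, self.max_attempts)
        return code  # hand this to the SMS or push provider (not shown)

    def verify(self, account_id: str, submitted: str, now: float) -> bool:
        pending = self._pending.get(account_id)
        if pending is None:
            return False
        if now > pending.expires_at or pending.attempts_left <= 0:
            del self._pending[account_id]  # expired or locked out: a fresh code is required
            return False
        pending.attempts_left -= 1
        # Constant-time comparison avoids leaking how many characters matched.
        if hmac.compare_digest(submitted.encode(), pending.code.encode()):
            del self._pending[account_id]  # a code can be used only once
            return True
        return False
```

The attempt limit matters because a 6-digit code has only a million possible values. Without a limit, a fraudster on the line could simply keep guessing.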
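The anomaly detection technique described under "Account takeover prevention" flags attempts from unfamiliar locations or devices and prompts additional verification. For illustration, it can be reduced to a simple rule. In the hypothetical Python sketch below, each unfamiliar signal raises the level of scrutiny. The AccountProfile model, the country-level location check, and the decision labels are all assumptions, not a recommended policy.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class AccountProfile:
    """Devices and countries previously seen for an account (hypothetical model)."""
    known_devices: set[str] = field(default_factory=set)
    known_countries: set[str] = field(default_factory=set)


def decide_access(profile: AccountProfile, device_id: str, country: str) -> str:
    """Return 'allow', 'step_up', or 'review' depending on how unfamiliar the attempt is.

    Illustrative rule: one unfamiliar signal triggers extra verification,
    two unfamiliar signals send the attempt to manual review.
    """
    unfamiliar = 0
    if device_id not in profile.known_devices:
        unfamiliar += 1
    if country not in profile.known_countries:
        unfamiliar += 1
    if unfamiliar == 0:
        return "allow"
    if unfamiliar == 1:
        return "step_up"  # e.g. require a one-time code before proceeding
    return "review"  # unfamiliar device and unfamiliar location together
```

After a consumer passes the extra verification, the new device or country would typically be added to their profile so that later contacts are not challenged again.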
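The behavior analysis described under "Real-time monitoring and analytics" flags deviations from how a consumer normally uses their account. A common baseline technique is the z-score: how many standard deviations a new value, such as a transaction amount, lies from the consumer's historical mean. The Python sketch below applies that technique. The function name, the 3-standard-deviation threshold, and the minimum history length are illustrative assumptions.

```python
from __future__ import annotations

import statistics


def deviates_from_norm(history: list[float], value: float,
                       threshold: float = 3.0, min_history: int = 5) -> bool:
    """Return True if value lies more than `threshold` standard deviations from the mean of history."""
    if len(history) < min_history:
        return False  # too little history to define "normal"; rely on other checks
    mean = statistics.fmean(history)
    stdev = statistics.pstdev(history)  # population standard deviation of past values
    if stdev == 0:
        return value != mean  # perfectly regular history: any change counts as a deviation
    return abs(value - mean) / stdev > threshold
```

For a history of 50, 55, 45, 60, and 40, the mean is 50 and the population standard deviation is about 7.07. A new amount of 500 is therefore roughly 64 standard deviations from normal and would be flagged, while 55 would not.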
This article includes content created by an AI language model and is intended to provide general information.
[1] Worldmetrics.org, "Account Takeover Statistics: Losses to Reach $6.24 Billion Globally," 2024.
[2] Security.org, "Account Takeover Incidents are Rising: How to Protect Yourself in 2024."